‘Blog’

Technical Writing and its ‘Hierarchy of Needs’

Wednesday, February 2nd, 2022

Technical writing is hard to do well and it’s also a bit different from other types of writing. While good technical writing has no strict definition, I do think there is a kind of ‘hierarchy of needs’ that defines it. I’m not sure this is complete or perfect, but I find categorizing to be useful.

L1 – Writing Clearly

The author writes in a way that accurately represents the information they want to convey. Sentences have a clear purpose. The structure of the text flows from point to point.

L2 – Explaining Well (Logos in rhetorical theory)

The author breaks their argument down into logical blocks that build on one another to make complex ideas easier to understand. When done well, this almost always involves (a) short, inline examples to ground abstract ideas and (b) a concise and logical flow through the argument which does not repeat other than for grammatical effect or flip-flop between points.

L3 – Style

The author uses different turns of phrase, switches in person, different grammatical structures, humor, etc. to make their writing more interesting to read. Good style keeps the reader engaged. You know it when you see it as the ideas flow more easily into your mind. Really good style even evokes an emotion of its own. By contrast, an author can write clearly and explain well, but in a way that feels monotonous or even boring.

L4 – Evoking Emotion (Pathos in rhetorical theory)

I think this is the most advanced and also the most powerful level, particularly where it inspires the reader to take action based on your words through an emotional argument. To take an example, Martin Kleppmann’s ‘Turning the Database Inside Out’ inspired a whole generation of software engineers to rethink how they build systems. Tim or Kris’ humor works in a different but equally effective way. Other appeals include establishing a connection with the reader, grounding in a subculture that the author and reader belong to, establishing credibility (ethos), highlighting what they are missing out on (FOMO), and influencing through knowing and opinionated command of the content. There are many more.

The use of pathos (sadly) doesn’t always imply logos: logical fallacies are often used, even in technical writing. Writing is so much more powerful if both are used together.

Designing Event Driven Systems – Summary of Arguments

Thursday, October 4th, 2018

This post provides a terse summary of the high-level arguments addressed in my book.

Why Change is Needed

Technology has changed:

- Partitioned/Replayable logs provide previously unattainable levels of throughput (up to Terabit/s), storage (up to PB) and high availability.

- Stateful Stream Processors include a rich suite of utilities for handling Streams, Tables, Joins, Buffering of late events (important in asynchronous communication), state management. These tools interface directly with business logic. Transactions tie streams and state together efficiently.

- Kafka Streams and KSQL are DSLs which can be run as standalone clusters, or embedded into applications and services directly. The latter approach makes streaming an API, interfacing inbound and outbound streams directly into your code.

Businesses need asynchronicity:

- Businesses are a collection of people, teams and departments performing a wide range of functions, backed by technology. Teams need to work asynchronously with respect to one another to be efficient.

- Many business processes are inherently asynchronous, for example shipping a parcel from a warehouse to a user’s door.

- A business may start as a website, where the front end makes synchronous calls to backend services, but as it grows the web of synchronous calls tightly couples services together at runtime. Event-based methods reverse this, decoupling systems in time and allowing them to evolve independently of one another.

A message broker has notable benefits:

- It flips control of routing, so a sender does not know who receives a message, and there may be many different receivers (pub/sub). This makes the system pluggable, as the producer is decoupled from the potentially many consumers.

- Load and scalability become a concern of the broker, not the source system.

- There is no requirement for backpressure. The receiver defines their own flow control.

Systems still require Request Response

- Whilst many systems are built to be entirely event-driven, request-response protocols remain the best choice for many use cases. The rule of thumb is: use request-response for intra-system communication, particularly queries or lookups (customers, shopping carts, DNS); use events for state changes and inter-system communication (changes to business facts that are needed beyond the scope of the originating system).

Data-on-the-outside is different:

- In service-based ecosystems the data that services share is very different to the data they keep inside their service boundary. Outside data is harder to change, but it has more value in a holistic sense.

- The events services share form a journal, or ‘Shared Narrative’, describing exactly how your business evolved over time.

Databases aren’t well shared:

- Databases have rich interfaces that couple them tightly with the programs that use them. This makes them useful tools for data manipulation and storage, but poor tools for data integration.

- Shared databases form a bottleneck (performance, operability, storage etc.).

Data Services are still “databases”:

- A database wrapped in a service interface still suffers from many of the issues seen with shared databases (The Integration Database Antipattern). Either it provides all the functionality you need (becoming a homegrown database) or it provides a mechanism for extracting that data and moving it (becoming a homegrown replayable log).

Data movement is inevitable as ecosystems grow.

- The core datasets of any large business end up being distributed to the majority of applications.

- Messaging moves data from a tightly coupled place (the originating service) to a loosely coupled place (the service that is using the data). Because this gives teams more freedom (operationally, data enrichment, processing), it tends to be where they eventually end up.

Why Event Streaming

Events should be 1st Class Entities:

- Events are two things: (a) a notification and (b) a state transfer. The former leads to stateless architectures, the latter to stateful architectures. Both are useful.

- Events become a Shared Narrative describing the evolution of the business over time: When used with a replayable log, service interactions create a journal that describes everything a business does, one event at a time. This journal is useful for audit, replay (event sourcing) and debugging inter-service issues.

- Event-Driven Architectures move data to wherever it is needed: Traditional services are about isolating functionality that can be called upon and reused. Event-Driven architectures are about moving data to code, be it a different process, geography, disconnected device etc. Companies need both. The larger and more complex a system gets, the more it needs to replicate state.

Messaging is the most decoupled form of communication:

- Coupling relates to a combination of (a) data, (b) function and (c) operability

- Businesses have core datasets: these provide a base level of unavoidable coupling.

- Messaging moves this data from a highly coupled source to a loosely coupled destination which gives destination services control.

A Replayable Log turns ‘Ephemeral Messaging’ into ‘Messaging that Remembers’:

- Replayable logs can hold large, “Canonical” datasets where anyone can access them.

- You don’t ‘query’ a log in the traditional sense. You extract the data and create a view, in a cache or database of your own, or you process it in flight. The replayable log provides a central reference. This pattern gives each service the “slack” they need to iterate and change, as well as fitting the ‘derived view’ to the problem they need to solve.
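The "extract the data and create a view" idea can be illustrated with a toy model. This is only a sketch: the log is a plain in-memory list rather than a Kafka topic, and the names `DerivedViewSketch`, `Event` and `deriveView` are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model: the replayable log is an ordered list and a view is derived by replaying it. */
final class DerivedViewSketch {

    /** One event in the log: a key and the latest value for that key. */
    static final class Event {
        final String key;
        final String value;

        Event(String key, String value) {
            this.key = key;
            this.value = value;
        }
    }

    /** Replays the log from the given offset and folds it into a key -> latest value view. */
    static Map<String, String> deriveView(List<Event> log, int fromOffset) {
        Map<String, String> view = new HashMap<>();
        for (int offset = fromOffset; offset < log.size(); offset++) {
            Event event = log.get(offset);
            view.put(event.key, event.value); // later events overwrite earlier ones
        }
        return view;
    }

    public static void main(String[] args) {
        List<Event> log = Arrays.asList(
                new Event("customer-1", "London"),
                new Event("customer-2", "Paris"),
                new Event("customer-1", "Berlin"));

        // A service builds (or rebuilds) its own view by replaying from offset 0.
        Map<String, String> view = deriveView(log, 0);
        System.out.println(view.get("customer-1")); // Berlin
    }
}
```

Because the view is disposable, a service can throw it away and re-derive it in a different shape whenever its needs change.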

Replayable Logs work better at keeping datasets in sync across a company:

- Data that is copied around a company can be hard to keep in sync. The different copies have a tendency to slowly diverge over time. Use of messaging in industry has highlighted this.

- If messaging ‘remembers’, it’s easier to stay in sync. The back-catalogue of data (the source of truth) is readily available.

- Streaming encourages derived views to be frequently re-derived. This keeps them close to the data in the log.

Replayable logs lead to Polyglot Views:

- There is no one-size-fits-all in data technology.

- Logs let you have many different data technologies, or data representations, sourced from the same place.

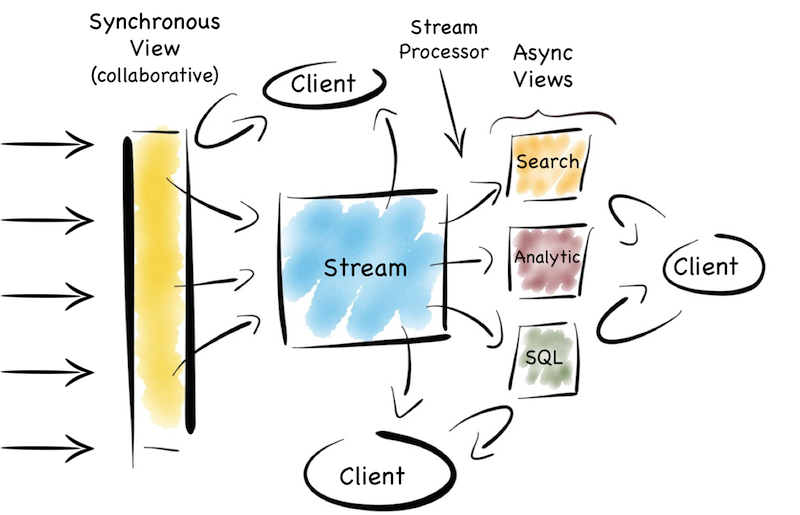

In Event-Driven Systems the Data Layer isn’t static

- In traditional applications the data layer is a database that is queried. In event-driven systems the data layer is a stream processor that prepares and coalesces data into a single event stream for ingest by a service or function.

- KSQL can be used as a data preparation layer that sits apart from the business functionality. KStreams can be used to embed the same functionality into a service.

- The streaming approach removes shared state (for example a database shared by different processes) allowing systems to scale without contention.

The ‘Database Inside Out’ analogy is useful when applied at cross-team or company scales:

- A streaming system can be thought of as a database turned inside out: a commit log and a set of materialized views, caches and indexes created in different datastores or in the streaming system itself. This leads to two benefits.

- Data locality is used to increase performance: data is streamed to where it is needed, in a different application, a different geography, a different platform, etc.

- Data locality is used to increase autonomy: Each view can be controlled independently of the central log.

- At company scales this pattern works well because it carefully balances the need to centralize data (to keep it accurate), with the need to decentralise data access (to keep the organisation moving).

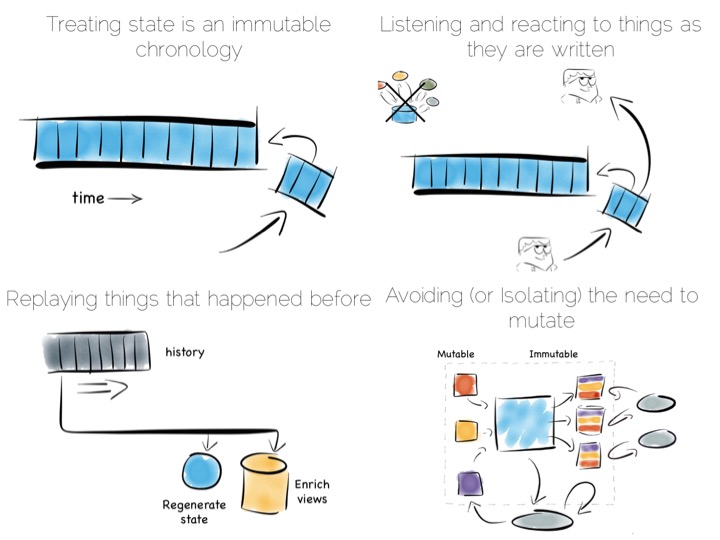

Streaming is a State of Mind:

- Databases, Request-response protocols and imperative programming lead us to think in blocking calls and command and control structures. Thinking of a business solely in this way is flawed.

- The streaming mindset starts by asking “what happens in the real world?” and “how does the real world evolve in time?” The business process is then modelled as a set of continuously computing functions driven by these real-world events.

- Request-response is about displaying information to users. Batch processing is about offline reporting. Streaming is about everything that happens in between.

The Streaming Way:

- Broadcast events

- Cache shared datasets in the log and make them discoverable.

- Let users manipulate event streams directly (e.g., with a streaming engine like KSQL)

- Drive simple microservices or FaaS, or create use-case-specific views in a database of your choice

The various points above lead to a set of broader principles that summarise the properties we expect in this type of system:

The WIRED Principles

Windowed: Reason accurately about an asynchronous world.

Immutable: Build on a replayable narrative of events.

Reactive: Be asynchronous, elastic & responsive.

Evolutionary: Decouple. Be pluggable. Use canonical event streams.

Data-Enabled: Move data to services and keep it in sync.

REST Request-Response Gateway

Thursday, June 7th, 2018

This post outlines how you might create a Request-Response Gateway in Kafka using the good old correlation ID trick and a shared response topic. It’s just a sketch. I haven’t tried it out.

A REST Gateway provides an efficient Request-Response bridge to Kafka. This is in some ways a logical extension of the REST Proxy, wrapping the concepts of both a request and a response.

What problem does it solve?

- Allows you to contact a service, and get a response back, for example:

- to display the contents of the user’s shopping basket

- to validate and create a new order.

- Access many different services, with their implementation abstracted behind a topic name.

- A simple RESTful interface removes the need for asynchronous programming front-side of the gateway.

So you may wonder: Why not simply expose a REST interface on a Service directly? The gateway lets you access many different services, and the topic abstraction provides a level of indirection in much the same way that service discovery does in a traditional request-response architecture. So backend services can be scaled out, instances taken down for maintenance etc, all behind the topic abstraction. In addition the Gateway can provide observability metrics etc in much the same way as a service mesh does.

You may also wonder: Do I really want to do request-response in Kafka? For commands, which are typically business events that have a return value, there is a good argument for doing this in Kafka. The command is a business event and is typically something you want a record of. For queries it is different: there is no need for broadcast and no need for retention, so a broker offers little value over a point-to-point interface like an HTTP request. So in the latter case we wouldn’t recommend this approach over, say, HTTP, but it is still useful for advocates who want a single transport and value that over the redundancy of using a broker for request-response (and yes, these people exist).

This pattern can be extended to be a sidecar rather than a gateway also (although the number of response topics could potentially become an issue in an architecture with many sidecars).

Implementation

Consider a gateway running three instances and three services: Orders, Customer and Basket. Each service has a dedicated request topic that maps to that entity. There is a single response topic dedicated to the Gateway.

The gateway is configured to support different services, each taking 1 request topic and 1 response topic.

Imagine we POST an Order and expect confirmation back from the Orders service that it was saved. This works as follows:

- The HTTP request arrives at one node in the Gateway. It is assigned a correlation ID.

- The correlation ID is derived so that it hashes to a partition of the response topic owned by this gateway node (we need this to route the response back to the correct instance). Alternatively a random correlation ID could be assigned and the request forwarded to the gateway node that owns the corresponding partition of the response topic.

- The request is tagged with a unique correlation ID and the name of the gateway response topic (each gateway has a dedicated response topic) then forwarded to the Orders Topic. The HTTP request is then parked in the webserver.

- The Orders Service processes the request and replies on the supplied response topic (i.e. the response topic of the REST Gateway), including the correlation ID as the key of the response message. When the REST Gateway receives the response, it extracts the correlation ID key and uses it to unblock the outstanding request so it responds to the user HTTP request.
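The steps above can be sketched in memory. This is an illustration rather than a tested implementation, in keeping with the rest of this post: no Kafka client is used, `String.hashCode` stands in for whatever partitioner the response topic really uses, and the names `GatewayNodeSketch`, `Parked`, `park` and `onResponse` are hypothetical.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

/** In-memory sketch of one gateway node. No Kafka client is involved. */
final class GatewayNodeSketch {

    /** A parked HTTP request: its correlation ID and the future that will hold the reply. */
    static final class Parked {
        final String correlationId;
        final CompletableFuture<String> response = new CompletableFuture<>();

        Parked(String correlationId) {
            this.correlationId = correlationId;
        }
    }

    private final int ownedPartition; // response-topic partition this node consumes
    private final int numPartitions;  // partition count of the response topic
    private final Map<String, Parked> parked = new ConcurrentHashMap<>();

    GatewayNodeSketch(int ownedPartition, int numPartitions) {
        this.ownedPartition = ownedPartition;
        this.numPartitions = numPartitions;
    }

    /** Stand-in for key hashing (assumption); a real producer applies its own partitioner. */
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    /** Parks a request under a correlation ID that hashes to this node's partition. */
    Parked park() {
        String id;
        do {
            id = UUID.randomUUID().toString();
        } while (partitionFor(id, numPartitions) != ownedPartition);
        Parked request = new Parked(id);
        parked.put(id, request);
        return request;
    }

    /** Called for each message read from the response topic; the key is the correlation ID. */
    boolean onResponse(String correlationId, String body) {
        Parked request = parked.remove(correlationId);
        if (request == null) {
            return false; // unknown ID, for example a request that was already abandoned
        }
        request.response.complete(body);
        return true;
    }

    public static void main(String[] args) {
        GatewayNodeSketch node = new GatewayNodeSketch(2, 3);
        Parked request = node.park();                    // the HTTP request arrives and is parked
        node.onResponse(request.correlationId, "saved"); // the Orders service replies
        System.out.println(request.response.join());     // saved
    }
}
```

Retrying random IDs until one lands on the owned partition is the simplest way to "derive" a suitable correlation ID; it takes about as many attempts as there are partitions on average.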

Exactly the same process can be used for GET requests, although providing streaming GETs will require some form of batch markers or similar, which would be awkward for services to implement, probably necessitating a client-side API.

If partitions move whilst requests are outstanding, those requests will time out. We could work around this but it is likely acceptable for an initial version.

This is very similar to the way the OrdersService works in the Microservice Examples.

Event-Driven Variant

When using an event-driven architecture via event collaboration, responses aren’t based on a correlation ID. They are based on the event state, so for example we might submit orders, then respond once they are in a state of VALIDATED. The most common way to implement this is with CQRS.

Websocket Variant

Some users might prefer a websocket so that the response can trigger action rather than polling the gateway. Implementing a websocket interface is slightly more complex as you can’t use the queryable state API to redirect requests in the same way that you can with REST. There needs to be some table that maps (RequestId->Websocket(Client-Server)) which is used to ‘discover’ which node in the gateway has the websocket connection for some particular response.

Slides from Craft Meetup

Wednesday, May 9th, 2018

The slides for the Craft Meetup can be found here.

Book: Designing Event Driven Systems

Friday, April 27th, 2018

I wrote a book: Designing Event Driven Systems

MOBI (Kindle)

Building Event Driven Services with Kafka Streams (Kafka Summit Edition)

Monday, April 23rd, 2018

The Kafka Summit version of this talk is more practical and includes code examples which walk through how to build a streaming application with Kafka Streams.

Slides for NDC – The Data Dichotomy

Friday, January 19th, 2018

Ben Stopford explains why understanding and accepting this dichotomy is an important part of designing service-based systems at any significant scale. Ben looks at how companies make use of a shared, immutable sequence of records to balance data that sits inside their services with data that is shared, an approach that allows the likes of Uber, Netflix, and LinkedIn to scale to millions of events per second.

Ben concludes by examining the potential of stream processors as a mechanism for joining significant, event-driven datasets across a whole host of services and explains why stream processing provides much of the benefits of data warehousing but without the same degree of centralization.

Handling GDPR: How to make Kafka Forget

Monday, December 4th, 2017

If you follow the press around Kafka you’ll probably know it’s pretty good at tracking and retaining messages, but sometimes removing messages is important too. GDPR is a good example of this as, amongst other things, it includes the right to be forgotten. This begs a very obvious question: how do you delete arbitrary data from Kafka? It’s an immutable log after all.

As it happens Kafka is a pretty good fit for GDPR as, along with the right to be forgotten, users also have the right to request a copy of their personal data. Companies are also required to keep detailed records of what data is used for — a requirement where recording and tracking the messages that move from application to application is a boon.

How do you delete (or redact) data from Kafka?

The simplest way to remove messages from Kafka is to simply let them expire. By default Kafka will keep data for one week and you can tune this as required. There is also an Admin API that lets you delete messages explicitly if they are older than some specified time or offset. But what if we are keeping data in the log for a longer period of time, say for Event Sourcing use cases or as a source of truth? For this you can make use of Compacted Topics, which allow messages to be explicitly deleted or replaced by key.

Data isn’t removed from Compacted Topics in the same way as say a relational database. Instead Kafka uses a mechanism closer to those used by Cassandra and HBase where records are marked for removal then later deleted when the compaction process runs.

To make use of this you configure the topic to be compacted and then send a delete event (by sending a null message, with the key of the message you want to delete). When compaction runs the message will be deleted forever.

```java
// Create a record in a compacted topic in Kafka
producer.send(new ProducerRecord<>(CUSTOMERS_TOPIC, "Donald Trump", "Job: Head of the Free World, Address: The White House"));
// Mark that record for deletion when compaction runs
producer.send(new ProducerRecord<>(CUSTOMERS_TOPIC, "Donald Trump", null));
```
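To make the effect of a null message concrete, here is a toy in-memory model of what compaction does to a log. It is a simplification under stated assumptions: real compaction works segment by segment, skips the active segment, and keeps tombstones around for a configurable period before dropping them. The names `CompactionSketch`, `LogEntry` and `compact` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of log compaction on an in-memory list of keyed records. */
final class CompactionSketch {

    static final class LogEntry {
        final String key;
        final String value; // null marks a tombstone (a delete event)

        LogEntry(String key, String value) {
            this.key = key;
            this.value = value;
        }
    }

    /** Keeps the latest entry per key, then drops keys whose latest entry is a tombstone. */
    static List<LogEntry> compact(List<LogEntry> log) {
        Map<String, LogEntry> latest = new LinkedHashMap<>();
        for (LogEntry entry : log) {
            latest.remove(entry.key); // re-insert so ordering follows the latest write
            latest.put(entry.key, entry);
        }
        List<LogEntry> compacted = new ArrayList<>();
        for (LogEntry entry : latest.values()) {
            if (entry.value != null) {
                compacted.add(entry);
            }
        }
        return compacted;
    }

    public static void main(String[] args) {
        List<LogEntry> log = Arrays.asList(
                new LogEntry("customer-1", "address A"),
                new LogEntry("customer-2", "address B"),
                new LogEntry("customer-1", null)); // delete event for customer-1

        List<LogEntry> compacted = compact(log);
        System.out.println(compacted.size());     // 1
        System.out.println(compacted.get(0).key); // customer-2
    }
}
```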

If the key of the topic is something other than the CustomerId then you need some process to map the two. So for example if you have a topic of Orders, then you need a mapping of Customer->OrderId held somewhere. Then to ‘forget’ a customer simply lookup their Orders and either explicitly delete them, or alternatively redact any customer information they contain. You can do this in a KStreams job with a State Store or alternatively roll your own.
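If you roll your own, the Customer->OrderId mapping might look something like the sketch below. It uses a plain in-memory map in place of a KStreams State Store, and the names `ForgetCustomerSketch`, `onOrder` and `forget` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** In-memory stand-in for a Customer -> OrderId index. */
final class ForgetCustomerSketch {
    private final Map<String, Set<String>> ordersByCustomer = new HashMap<>();

    /** Called for every order seen on the Orders topic. */
    void onOrder(String orderId, String customerId) {
        ordersByCustomer.computeIfAbsent(customerId, id -> new LinkedHashSet<>()).add(orderId);
    }

    /** Returns the order keys that need deleting (or redacting) and drops the mapping. */
    List<String> forget(String customerId) {
        Set<String> orderIds = ordersByCustomer.remove(customerId);
        if (orderIds == null) {
            return Collections.emptyList();
        }
        return new ArrayList<>(orderIds);
    }

    public static void main(String[] args) {
        ForgetCustomerSketch index = new ForgetCustomerSketch();
        index.onOrder("order-1", "customer-1");
        index.onOrder("order-2", "customer-1");
        index.onOrder("order-3", "customer-2");

        // Each returned key would then be sent to the Orders topic with a null value.
        System.out.println(index.forget("customer-1")); // [order-1, order-2]
    }
}
```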

There is a more unusual case where the key (which Kafka uses for ordering) is completely different to the key you want to be able to delete by. Let’s say that, for some reason, you need to key your Orders by ProductId. This wouldn’t be fine-grained enough to let you delete Orders for individual customers so the simple method above wouldn’t work. You can still achieve this by using a key that is a composite of the two: [ProductId][CustomerId] then using a custom partitioner in the Producer (see the Producer Config: “partitioner.class”) which extracts the ProductId and uses only that subsection for partitioning. Then you can delete messages using the mechanism discussed earlier using the [ProductId][CustomerId] pair as the key.
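The partitioning logic for such a composite key can be sketched as plain functions. This is not a Kafka `Partitioner` implementation, only the logic one might put inside it; the `|` separator, the use of `String.hashCode`, and the names `CompositeKeySketch`, `compositeKey`, `productIdOf` and `partitionFor` are all assumptions made for illustration.

```java
/** Sketch of partitioning on only the ProductId part of a [ProductId][CustomerId] key. */
final class CompositeKeySketch {
    // Hypothetical separator; ProductIds must not contain it.
    static final String SEPARATOR = "|";

    static String compositeKey(String productId, String customerId) {
        return productId + SEPARATOR + customerId;
    }

    /** Extracts the ProductId subsection of the composite key. */
    static String productIdOf(String compositeKey) {
        return compositeKey.substring(0, compositeKey.indexOf(SEPARATOR));
    }

    /** Chooses a partition from the ProductId only, so ordering per product is preserved. */
    static int partitionFor(String compositeKey, int numPartitions) {
        return Math.floorMod(productIdOf(compositeKey).hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        String first = compositeKey("product-7", "customer-1");
        String second = compositeKey("product-7", "customer-2");

        // Different keys (so each can be deleted individually), same partition.
        System.out.println(first.equals(second));                                  // false
        System.out.println(partitionFor(first, 12) == partitionFor(second, 12));   // true
    }
}
```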

What about the databases that I read data from or push data to?

Quite often you’ll be in a pipeline where Kafka is moving data from one database to another using Kafka Connectors. In this case you need to delete the record in the originating database and have that propagate through Kafka to any Connect Sinks you have downstream. If you’re using CDC this will just work: the delete will be picked up by the source Connector, propagated through Kafka and deleted in the sinks. If you’re not using a CDC enabled connector you’ll need some custom mechanism for managing deletes.

How long does Compaction take to delete a message?

By default compaction will run periodically and won’t give you a clear indication of when a message will be deleted. Fortunately you can tweak the settings for stricter guarantees. The best way to do this is to configure the compaction process to run continuously, then add a rate limit so that it doesn’t affect the rest of the system unduly:

```
# Ensure compaction runs continuously with a very low cleanable ratio
log.cleaner.min.cleanable.ratio=0.00001
# Set a limit on compaction so there is bandwidth for regular activities
log.cleaner.io.max.bytes.per.second=1000000
```

Setting the cleanable ratio to 0 would make compaction run continuously. A small, positive value is used here, so the cleaner doesn’t execute if there is nothing to clean, but will kick in quickly as soon as there is. A sensible value for the log cleaner max I/O is [max I/O of disk subsystem] x 0.1 / [number of compacted partitions]. So say this computes to 1MB/s then a topic of 100GB will clean removed entries within 28 hours. Obviously you can tune this value to get the desired guarantees.
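The arithmetic behind that estimate is easy to check. The sketch below assumes decimal units (1MB = 1,000,000 bytes) and a hypothetical disk subsystem of 100MB/s shared by 10 compacted partitions; the names `CompactionRateSketch`, `cleanerBytesPerSecond` and `hoursToClean` are made up for illustration.

```java
import java.util.Locale;

/** Back-of-the-envelope numbers for the log cleaner rate limit. */
final class CompactionRateSketch {

    /** [max I/O of disk subsystem] x 0.1 / [number of compacted partitions]. */
    static double cleanerBytesPerSecond(double diskBytesPerSecond, int compactedPartitions) {
        return diskBytesPerSecond * 0.1 / compactedPartitions;
    }

    /** Time to pass over a topic of the given size at the given cleaner rate, in hours. */
    static double hoursToClean(double topicBytes, double cleanerBytesPerSecond) {
        return topicBytes / cleanerBytesPerSecond / 3600.0;
    }

    public static void main(String[] args) {
        double rate = cleanerBytesPerSecond(100e6, 10); // roughly 1MB/s
        double hours = hoursToClean(100e9, rate);       // a 100GB topic
        System.out.println(String.format(Locale.ROOT, "%.1f hours", hours)); // 27.8 hours
    }
}
```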

One final consideration is that partitions in Kafka are made from a set of files, called segments, and the latest segment (the one being written to) isn’t considered for compaction. This means that a low throughput topic might accumulate messages in the latest segment for quite some time before it rolls and compaction kicks in. To address this we can force the segment to roll after a defined period of time. For example log.roll.hours=24 would force segments to roll every day if they haven’t already met their size limit.

Tuning and Monitoring

There are a number of configurations for tuning the compactor (see properties log.cleaner.* in the docs) and the compaction process publishes JMX metrics regarding its progress. Finally you can actually set a topic to be both compacted and have an expiry (an undocumented feature) so data is never held longer than the expiry time.

In Summary

Kafka provides immutable topics where entries are expired after some configured time, compacted topics where messages with specific keys can be flagged for deletion and the ability to propagate deletes from database to database with CDC enabled Connectors.

What could academia or industry do (short or long term) to promote more collaboration?

Saturday, October 14th, 2017

I did a little poll of friends and colleagues about this question. Here are some of the answers which I found quite thought provoking:

I’m a recovering academic from many years ago. I feel like I have some perspective on graduate/research departments in computer science, even though I am sure things have changed a little since I was in grad school.

One problem I saw is that a ton of the research done in Universities in computer science (outside areas like quantum computing, etc) lags behind industry. A lot of graduate students in Software Engineering worked on projects that capable companies had already solved or that a senior industry developer could solve in a few weeks.

I also see a lot of graduate student projects where they end up “building a tool” except the tool ends up being something nobody would ever use.

Every single one of those kinds of projects destroys the credibility of academics with industry.

A victory for academics seems to be publication or assembling statistical evidence for an assertion. I get it but nobody in industry cares about those things. Nobody. Change your goalposts and align them with industry if you want to collaborate with industry.

I also think there is huge overlap between graduate student research and startups. Let’s say I’m 24 years old, and I think I have an idea to change the world with technology. Instead of doing it at the University for an M.Sc I can just get some investment and build a startup (even without a business plan sometimes).

If academics want collaboration they need to be brutally honest with themselves and get more focused while facing where they sit today. The software being written inside Universities often sucks. The research often moves too slowly. Startups are the innovators. The kinds of evidence and assertions being “proven” in academia are mostly uninteresting. The outputs like publications are only read by other academics.

It might hurt but if you want credibility, cancel some of that crap. Work in the future, not in the past, understand your strengths and weaknesses and play to your strengths, change your goals to deliver outputs that are really consumable…

It’s a lot to ask, so I don’t see any of that happening…

My company engages quite a lot with academia, and even runs an Institute partly for this. The following is a bit of a brain-dump.

Within the institute we employ an academic-in-residence (Carlota Perez). This is to explicitly support and sponsor work that we think is valuable and should be completed. In this case, to help her finish her second book. The institute also runs a fellowship programme. This is broadly defined to attract individuals with ideas and talent to offer them a network and opportunities, supported by a stipend. We explicitly define this quite broadly to allow people who may not want to start businesses to find value.

Obviously we’re interested in finding people who want to start businesses, but we keep that distinct from the fellowship to allow more far-reaching visions space to grow, at least a little. If fellows do want to found a business, and are capable of it, then we draw them into and support them in that.

We’re looking to participate more in academic-industry think-tanks, and other bodies. We individually connect to people in these bodies, and in academia, a lot in workshops we run. Mostly to generate ideas and explore spaces.

Finally, we read a lot of papers.

In our view, this is a start, but not enough. We are doing a little to sponsor the development of ideas within academia, via Carlota Perez, and we’re allowing people to start research projects in the fellowship. But we want to help with more execution and scale. We’ve tried to partner with some universities, but we find that they’re not commercially-focused enough to support us in raising the capital to actually execute with. They want to provide ideas, we provide execution, and capital appears by magic. We need a bit more than that.

I was affiliated with [Top UK University] for a time and here is my top-2 list of difficulties:

– IP: the university makes it really hard to separate the IP between work done during the collaboration vs work done in the day job (industry). The amount of paperwork is typical of a bureaucratic institution. Turn off for many people (why bother).

– IP again: this is slightly tangential to the original question and is more related to a different kind of industry-academia collaboration, one where the prof does a startup while in academia. [Top UK University] for example had a policy that 50% of the equity of the startup belonged to [Top UK University]. That number is huge. Prevents other VCs from investing in the startup. Guarantees that basically no one will do a serious startup. A more comparable number in leading US universities like Stanford is 2-5%. There were creative ways around that, but it was a grey area legally. Again, why would one bother going through the hoops. It’s easier to just not deal with academia at all.

My suggestion would be that industry and academia need to develop more understanding of, and respect for, each other’s needs and incentives. To put it bluntly, the career demands are very different: industry people need to ship products that customers care about, while academics need to publish papers in good venues. With those different incentives come different timelines for working (industry thinks about shipping quickly and long-term maintenance; academia thinks about big ideas for the future, but doesn’t care about the code once the paper is published), different prioritisation of aspects of the work (e.g. testing), etc. Of course those are over-simplified caricatures, but I hope you get the idea.

I don’t think one is better than the other — they are just different, and for a collaboration to be productive, I think there needs to be mutual understanding and empathy for these different needs. People who have only worked in one of the two may get frustrated with people from the other camp, feeling that they just “don’t get what’s important” (because indeed different things are important).

Caveat: I’m still affiliated with various academic advisory boards so am somewhat biased by the progress we’re making. A few personal comments / observations:

– Although academia has shifted slightly to focus more on “impact” not just papers.

– The points made above have always been particularly troublesome for working with [Top UK University] due to the [Top UK University] Innovations licensing arrangements, but I think as that arrangement expires there’s recognition that companies can’t keep sinking massive grants into Universities, unless they’re philanthropic, without new creative commercial ways of working.

– Linked to the above two points one of the frustrations for industry is that a low TRL development that appears to be 80% of the commercial offer realised in a Uni can be achieved in 20% of the time but the other “20%” productisation to commercial fruition / TRL7 will be 800% of the industry partners production costs and associated time etc… This should be reflected in the engagement and IP position but isn’t really.

– Academia is only just recognising that it must adjust to collaborate or risk being out-competed where “Quantum compute” or “fundamental battery tech”, etc., research groups are appearing in bigger tech companies.

Caveat – my subjective view out of ignorance from the fringes: The EPSRC Industrial Strategy Challenge Fund and Prosperity Partnerships are a massive opportunity and yet the ISCF Waves that have appeared appear to have done so with limited industrial awareness, formal structure and engagement. So those that have been engaged have been at the table more likely through personal relationships, etc. So this needs more publicity and more formality… There also needs to be a clear understanding of Innovate UK, the Catapults’ and Research Councils’ roles.

I’m not sure I have a great answer to this but I think it’s an interesting question. In the distributed systems world academia plays an important role, but there is always a divide. Things that I think might be useful:

– Doing more to reach the audience in industry. The best example of this I’ve seen is https://blog.acolyer.org/.

– Partnering to study why things work well in practice rather than in theory. For example there is much the wider community can learn from the internal design decisions made by key open source components that run in the real world. So in my field the design decisions made building Kafka, Cassandra, Zookeeper, HBase could use further study which would be useful for the next iteration of technologies.

– Making it easier for industrial practitioners to play a role in academia. I know a few people that do this. I’m not entirely sure how it works, but I feel it could be done more.

Finally some comments on twitter here: https://twitter.com/benstopford/status/917991118058459138

Delete Arbitrary Messages from a Kafka

Friday, October 6th, 2017

I’ve been asked a few times about how you can delete messages from a topic in Kafka. So for example, if you work for a company and you have a central Kafka instance, you might want to ensure that you can delete any arbitrary message due to, say, regulatory or data protection requirements or maybe simply in case something gets corrupted.

A potential trick to do this is to use a combination of (a) a compacted topic, (b) a custom partitioner and (c) a pair of interceptors.

The process would follow:

- Use a producer interceptor to add a GUID to the end of the key before it is written.

- Use a custom partitioner to ignore the GUID for the purposes of partitioning

- Use a compacted topic so you can then delete any individual message you need via producer.send(key+GUID, null)

- Use a consumer interceptor to remove the GUID on read.

Two caveats: (1) Log compaction does not touch the most recent segment, so values will only be deleted once the first segment rolls. This essentially means it may take some time for the ‘delete’ to actually occur. (2) I haven’t tested this!
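
To make the steps above a little more concrete, here is a minimal in-memory sketch in Python. It doesn’t touch the Kafka client at all: the compacted topic is modelled as a dict per partition, deletes happen immediately rather than when a segment rolls, and the names `CompactedTopic`, `add_guid`, `strip_guid`, `partition_for` and the `SEP` separator are all made up for illustration. Like the idea itself, it hasn’t been tried against a real cluster.

```python
import uuid
import zlib

SEP = "|"  # hypothetical separator between the business key and the GUID


def add_guid(key):
    """Producer-interceptor step: make every message key unique."""
    return f"{key}{SEP}{uuid.uuid4()}"


def strip_guid(key):
    """Consumer-interceptor step: recover the business key on read."""
    return key.rsplit(SEP, 1)[0]


def partition_for(key, num_partitions):
    """Custom-partitioner step: hash only the business key, ignoring the GUID."""
    return zlib.crc32(strip_guid(key).encode()) % num_partitions


class CompactedTopic:
    """Toy stand-in for a compacted topic: latest value per key, null deletes."""

    def __init__(self, num_partitions=3):
        self.partitions = [dict() for _ in range(num_partitions)]

    def send(self, key, value):
        partition = self.partitions[partition_for(key, len(self.partitions))]
        if value is None:
            # Tombstone. Real compaction removes the key later, not instantly.
            partition.pop(key, None)
        else:
            partition[key] = value

    def read(self, business_key):
        partition = self.partitions[partition_for(business_key, len(self.partitions))]
        return [v for k, v in partition.items() if strip_guid(k) == business_key]


topic = CompactedTopic()
first = add_guid("bob")
second = add_guid("bob")
topic.send(first, "order-1")
topic.send(second, "order-2")
topic.send(first, None)  # delete just the first message
remaining = topic.read("bob")
```

Both messages for “bob” land in the same partition because the partitioner ignores the GUID, yet each can be deleted on its own because the full key is unique.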

Slides Kafka Summit SF – Building Event-Driven Services with Stateful Streams

Monday, August 28th, 2017

Devoxx 2017 – Rethinking Services With Stateful Streams

Friday, May 12th, 2017

The Data Dichotomy

Wednesday, December 14th, 2016

A post about services and data, published on the Confluent site.

https://www.confluent.io/blog/data-dichotomy-rethinking-the-way-we-treat-data-and-services/

QCon Interview on Microservices and Stream Processing

Friday, February 19th, 2016

This is a transcript from an interview I did for QCon (delivered verbally):

QCon: What is your main focus right now at Confluent?

Ben: I work as an engineer in the Apache Kafka Core Team. I do some system architecture work too. At the moment, I am working on automatic data balancing within Kafka. Auto data balancing is basically expanding, contracting and balancing resources within the cluster as you add/remove machines or add some other kind of constraint or invariant. Basically, I’m working on making the cluster grow and shrink dynamically.

QCon: Is stream processing new?

Ben: Stream processing, as we know it, has really come from the background of batch analytics (around Hadoop) and that has kind of evolved into this stream processing thing as people needed to get things done faster. Although to be honest, stream processing has been around for 30 years in one form or another, but it has just always been quite niche. It’s only recently that it’s moved mainstream. That’s important because if you look at the stream processing technology from a decade ago, it was just a bit more specialist, less scalable, less available and less accessible (though, certainly not simple). Now that stream processing is more mainstream, it comes with a lot of quite powerful tooling and the ecosystem is just much bigger.

QCon: Why do you feel streaming data is an important consideration for Microservice architectures?

Ben: So you don’t see people talking about stream processing and Microservices together all that much. This is largely because they came from different places. But stream processing turns out to be pretty interesting from the Microservice perspective because there’s a bunch of overlap in the problems they need to solve as data scales out and business workflows cross service and data boundaries.

As you move from a monolithic application to a set of distributed services, you end up with much more complicated systems to plan and build (whether you like it or not). People typically have ReST for Request/Response, but most of the projects we see have moved their fact distribution to some sort of brokered approach, meaning they end up with some combination of request/response and event-based processing. So if ReST is at one side of the spectrum, then Kafka is at the other and the two end up being pretty complementary. But there is actually a cool interplay between these two when you start thinking about it. Synchronous communication works well for a bunch of use cases, particularly GUIs or external services that are inherently RPC. Event-driven methods tend to work better for business processes, particularly as they get larger and more complex. This leads to patterns that end up looking a lot like event-driven architectures.

So when we actually build these things a bunch of problems pop up because no longer do we have a single shared database. We have no global bag of state in the sky to lean on. Sure, we have all the benefits of bounded contexts, nicely decoupled from the teams around them and this is pretty great for the most part. Database couplings have always been a bit troublesome and hard to manage. But now we hit all the pains of a distributed system and this means we end up having to be really careful about how we sew data together so we don’t screw it up along the way.

Relying on a persistent, distributed log helps with some of these problems. You can blend the good parts of shared statefulness and reliability without the tight centralised couplings that come with a shared database. That’s actually pretty useful from a microservices perspective because you can lean on the backing layer for a bunch of stuff around durability, availability, recovery, concurrent processing and the like.

But it isn’t just durability and history that helps. Services end up having to tackle a whole bunch of other problems that share similarities with stream processing systems. Scaling out, providing redundancy at a service level, dealing with sources that can come and go, where data may arrive on time or may be held up. Combining data from a bunch of different places. Quite often this ends up being solved by pushing everything into a database, inside the service, and querying it there, but that comes with a bunch of problems in its own right.

So a lot of the functions you end up building to do processing in these services, overlap with what stream processing engines do: join tables and streams from different places, create views that match your own domain model. Filter, aggregate, window these things further. Put this alongside a highly available distributed log and you start to get a pretty compelling toolset for building services that scale simply and efficiently.

QCon: What’s the core message of your talk?

Ben: So the core message is pretty simple. There’s a bunch of stuff going on over there, there’s a bunch of stuff going on over here. Some people are mashing this stuff together and some pretty interesting things are popping out. It’s about bringing these parts of industry together. So utilizing a distributed log as a backbone has some pretty cool side effects. Add a bit of stream processing into the mix and it all gets a little more interesting still.

So say you’re replacing a mainframe with a distributed service architecture, running on the cloud, you actually end up hitting a bunch of the same problems you hit in the analytic space as you try to get away from the Lambda Architecture.

The talk dives into some of these problems and tries to spell out a different way of approaching them from a services perspective, but using a stream processing toolset. Interacting with external sources, slicing and dicing data, dealing with windowing, dealing with exactly once processing, and not just from the point of view of web logs or social data. We’ll be thinking business systems, payment processing and the like.

The Benefits of “In-Memory” Data are Often Overstated

Sunday, January 3rd, 2016

There is an intuition we all share that memory is king. It’s fast, sexy and blows the socks off old school spinning disks or even new school SSDs. At its heart this is of course true. But when it comes to in-memory data technologies, alas it’s a lie. At least on balance.

The simple truth is this. The benefit of an in memory “database” (be it a db, distributed cache, data grid or something else) is two fold.

(1) It doesn’t have to use a data-structure that is optimised for disk. What that really means is you don’t need to read in pages like a database does, and you don’t have to serialise/deserialise between the database and your program. You just use whatever your domain model is in memory.

(2) You can load data into an in-memory database faster. The fastest disk backed databases top out bulk loads at around 100MB/s (~GbE network).

That’s it. There is nothing else. Reads will be no faster. There are many other downsides.

Don’t believe me? Read on.

Let’s consider why we use disk at all. Gaining a degree of fault tolerance is a common reason. We want to be able to pull the plug without fear of losing data. But if we have the data safely held elsewhere this isn’t such a big deal.

Disk is also useful for providing extra storage, allowing us to ‘overflow’ our available memory. This can become painful if we take the concept too far. The sluggish performance of an overloaded PC that’s constantly paging memory to and from disk is an intuitive example, but this approach actually proves to be very successful in many data technologies, when the commonly used dataset fits largely in memory.

The operating system’s page cache is a key ingredient here. It’ll happily gobble up any available RAM, making many disk-backed solutions perform similarly to in-memory ones when there’s enough memory to play with. This applies to both reads and writes too, assuming the OS is left to page data to disk in its own time.

So this means the two approaches often perform similarly. Say we have two 128GB machines. On one we install an in-memory database. On the other we install a similar disk-backed database. We put 100GB of data into each of them. The disk-backed database will be reading data from memory most of the time. But it’ll also let you overflow beyond 128GB, pushing infrequently used data (which is common in most systems) onto disk so it doesn’t clutter the address space.

Now the tradeoff is a little subtler in reality. An in-memory database can guarantee comparatively fast random access. This gives good breadth for optimisation. On the other hand, the disk-backed database must use data structures optimised for the sequential approaches that magnetic (and to a slightly lesser extent SSD) based media require for good performance, even if the data is actually being served from memory.

So if the storage engine is something like an LSM tree there will be an associated overhead that the in-memory solution would not need to endure. This is undoubtedly significant, but we are still left wondering whether the benefit of this optimisation is really worth the downsides of a pure, in-memory solution.

Another subtlety relates to something we mentioned earlier. We may use disk for fault tolerance. A typical disk-backed database, like Postgres or Cassandra, uses disk in two different ways. The storage engine will use a file structure that is read-optimised in some way. In most cases an additional structure is used, generally termed a Write Ahead Log. This provides a fast way of logging data to persistent media so the database can reply to clients in the knowledge that data is safe.

Now some in-memory databases neglect durability completely. Others provide durability through replication (a second replica exists on another machine using some clustering protocol). This latter pattern has much value as it increases availability in failure scenarios. But this concern is really orthogonal. If you need a write ahead log use one, or use replicas. Whether your dataset is pinned entirely in memory, or can overflow to disk, is a separate concern.

A different reason to turn to a purely in-memory solution is to host a database in-process (i.e. in the program you are querying from). In this case the performance gain comes largely from the shared address space, lack of network IO, and maybe a lack of de/serialisation etc. This is valuable for applications which make use of local data processing. But all the arguments above still apply and disk overflow is again, often sensible.

So the key point is really that having disk around, as something to overflow into, is well worth the marginal tradeoff in performance. This is particularly true from an operational perspective. There is no hard ceiling, which means you can run closer to the limit without fear of failure. This makes disk-backed solutions cheaper and less painful to run. The overall cost of write amplification (the additional storage overhead associated with each record) is often underestimated**, meaning we often hit the memory wall sooner than we’d like. Moreover the reality of most projects is that a small fraction of the data held is used frequently, so paying the price of holding that in RAM can become a burden as datasets grow… and datasets always grow!

There is also reason to urge caution though. The disk-is-slow intuition is absolutely correct. Push your disk-backed dataset to the point where the disk is being used for frequent random access and performance is going to end up falling off a very steep cliff. The point is simply that, for many use cases, there’s likely more wiggle room than you may think.

So memory optimised is good. Memory optimised is fast. But the hard limit imposed by pure in-memory solutions is often not worth the operational burden, especially when disk-backed solutions, provided ample memory to use for caching, perform equally well for all but the most specialised, data intensive use cases.

** When I worked with distributed caches a write amplification of x6 was typical in real world systems. This was made up of a number of factors: primary and replica copies, JVM overhead, data skew across the cluster, overhead of Java object representations, indexes.

Elements of Scale: Composing and Scaling Data Platforms

Tuesday, April 28th, 2015

This post is the transcript from a talk, of the same name, given at Progscon & JAX Finance 2015.

There is a video also.

As software engineers we are inevitably affected by the tools we surround ourselves with. Languages, frameworks, even processes all act to shape the software we build.

Likewise databases, which have trodden a very specific path, inevitably affect the way we treat mutability and share state in our applications.

Over the last decade we’ve explored what the world might look like had we taken a different path. Small open source projects try out different ideas. These grow. They are composed with others. The platforms that result utilise suites of tools, with each component often leveraging some fundamental hardware or systemic efficiency. The result: platforms that solve problems too unwieldy or too specific to work within any single tool.

So today’s data platforms range greatly in complexity. From simple caching layers or polyglot persistence right through to wholly integrated data pipelines. There are many paths. They go to many different places. In some of these places at least, nice things are found.

So the aim for this talk is to explain how and why some of these popular approaches work. We’ll do this by first considering the building blocks from which they are composed. These are the intuitions we’ll need to pull together the bigger stuff later on.

In a somewhat abstract sense, when we’re dealing with data, we’re really just arranging locality. Locality to the CPU. Locality to the other data we need. Accessing data sequentially is an important component of this. Computers are just good at sequential operations. Sequential operations can be predicted.

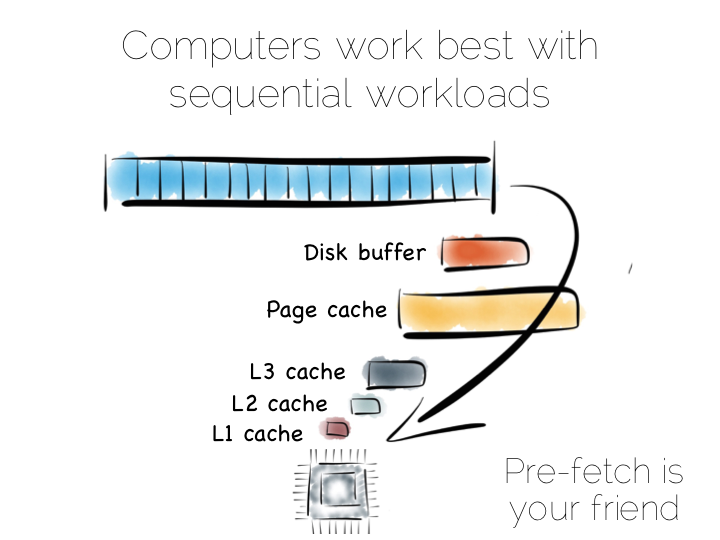

If you’re taking data from disk sequentially it’ll be pre-fetched into the disk buffer, the page cache and the different levels of CPU caching. This has a significant effect on performance. But it does little to help the addressing of data at random, be it in main memory, on disk or over the network. In fact pre-fetching actually hinders random workloads as the various caches and frontside bus fill with data which is unlikely to be used.

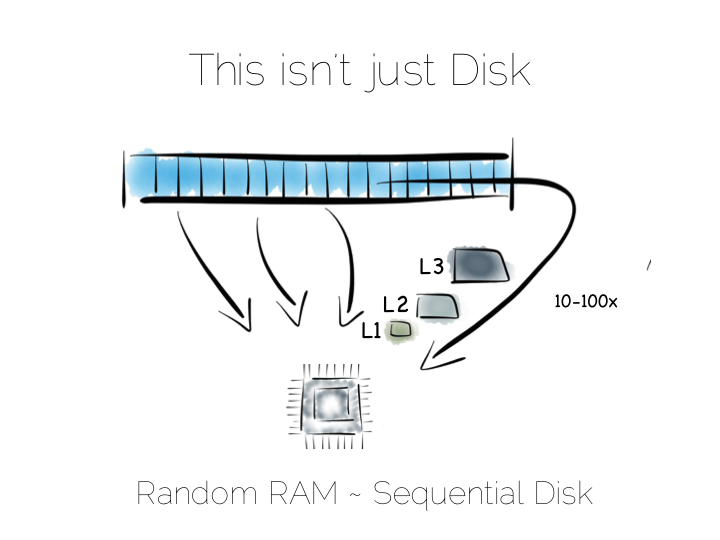

So whilst disk is somewhat renowned for its slow performance, main memory is often assumed to simply be fast. This is not as ubiquitously true as people often think. There are one to two orders of magnitude between random and sequential main memory workloads. Use a language that manages memory for you and things generally get a whole lot worse.

Streaming data sequentially from disk can actually outperform randomly addressed main memory. So disk may not always be quite the tortoise we think it is, at least not if we can arrange sequential access. SSDs, particularly those that utilise PCIe, further complicate the picture as they demonstrate different tradeoffs, but the caching benefits of the two access patterns remain, regardless.

So let’s imagine, as a simple thought experiment, that we want to create a very simple database. We’ll start with the basics: a file.

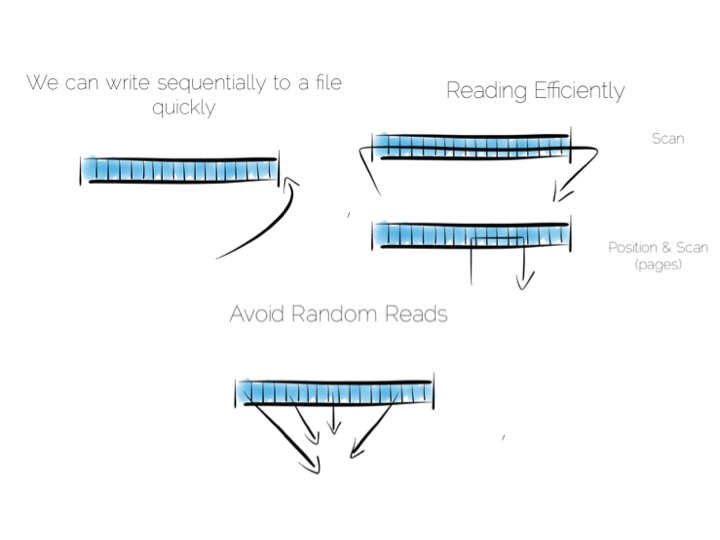

We want to keep writes and reads sequential, as it works well with the hardware. We can append writes to the end of the file efficiently. We can read by scanning the file in its entirety. Any processing we wish to do can happen as the data streams through the CPU. We might filter, aggregate or even do something more complex. The world is our oyster!

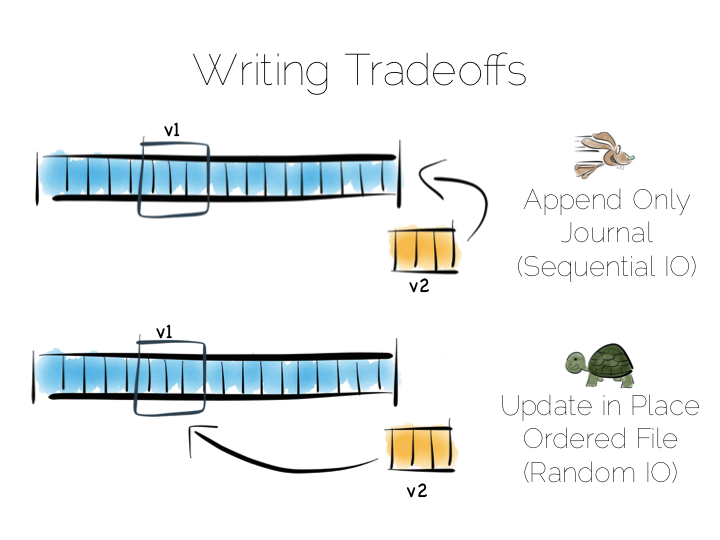

So what about data that changes, updates etc?

We have a couple of options. We could update the value in place. We’d need to use fixed width fields for this, but that’s ok for our little thought experiment. But update in place would mean random IO. We know that’s not good for performance.

Alternatively we could just append updates to the end of the file and deal with the superseded values when we read it back.

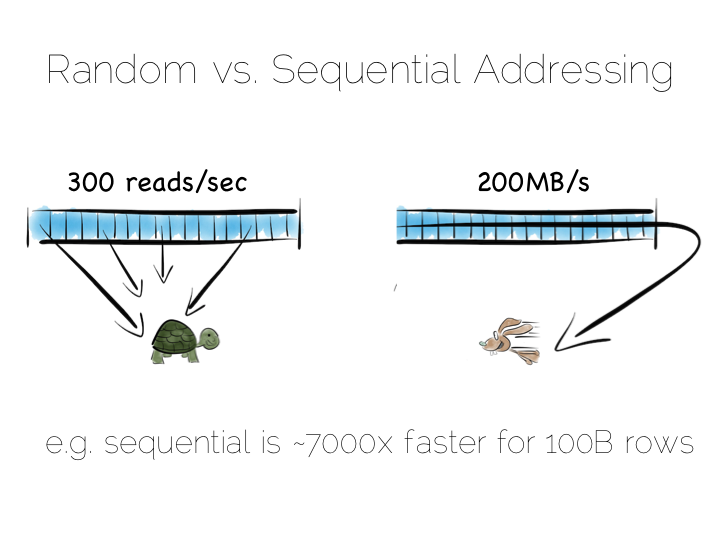

So we have our first tradeoff. Append to a ‘journal’ or ‘log’, and reap the benefits of sequential access. Alternatively if we use update in place we’ll be back to 300 or so writes per second, assuming we actually flush through to the underlying media.
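
Here is a minimal sketch of the append-only option, assuming a line-per-record JSON format that I’ve made up for the thought experiment (`append`, `scan` and the file name are hypothetical). Updates are simply appended, and superseded values are resolved when the file is read back.

```python
import json
import os
import tempfile


def append(path, key, value):
    """Append one record to the end of the file; nothing is updated in place."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps({"key": key, "value": value}) + "\n")


def scan(path):
    """Read the whole file sequentially; later records supersede earlier ones."""
    latest = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            latest[record["key"]] = record["value"]
    return latest


log_path = os.path.join(tempfile.mkdtemp(), "journal.log")
append(log_path, "bob", "London")
append(log_path, "alice", "Paris")
append(log_path, "bob", "Berlin")  # an update is just another append
state = scan(log_path)
```

The write path never seeks, which is the whole point. The cost is paid on read, where every record has to stream past before we know the current value.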

Now in practice of course reading the file, in its entirety, can be pretty slow. We’ll only need to get into GBs of data and the fastest disks will take seconds. This is what a database does when it ends up table scanning.

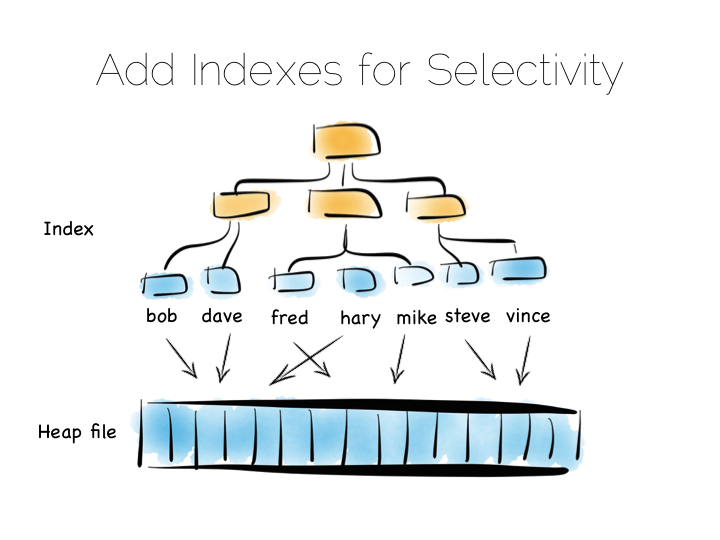

Also we often want something more specific, say customers named “bob”, so scanning the whole file would be overkill. We need an index.

Now there are lots of different types of indexes we could use. The simplest would be an ordered array of fixed-width values, in this case customer names, held with the corresponding offsets in the heap file. The ordered array could be searched with binary search. We could also of course use some form of tree, bitmap index, hash index, term index etc. Here we’re picturing a tree.
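
As a rough sketch of the simplest option, the ordered array, the snippet below keeps (name, offset) pairs sorted and finds them with Python’s `bisect` module. The heap file is modelled as a dict from offset to record, and the offsets and records are invented for illustration.

```python
import bisect

# Heap file modelled as offset -> record; the offsets are hypothetical.
heap_file = {
    0: ("bob", "London"),
    64: ("alice", "Paris"),
    128: ("bob", "Berlin"),
    192: ("carol", "Rome"),
}

# The index: (name, offset) pairs kept in sorted order.
index = sorted((record[0], offset) for offset, record in heap_file.items())


def lookup(name):
    """Binary search for the first entry with this name, then walk the matches."""
    i = bisect.bisect_left(index, (name,))
    matches = []
    while i < len(index) and index[i][0] == name:
        matches.append(heap_file[index[i][1]])
        i += 1
    return matches


bobs = lookup("bob")
nobody = lookup("dave")
```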

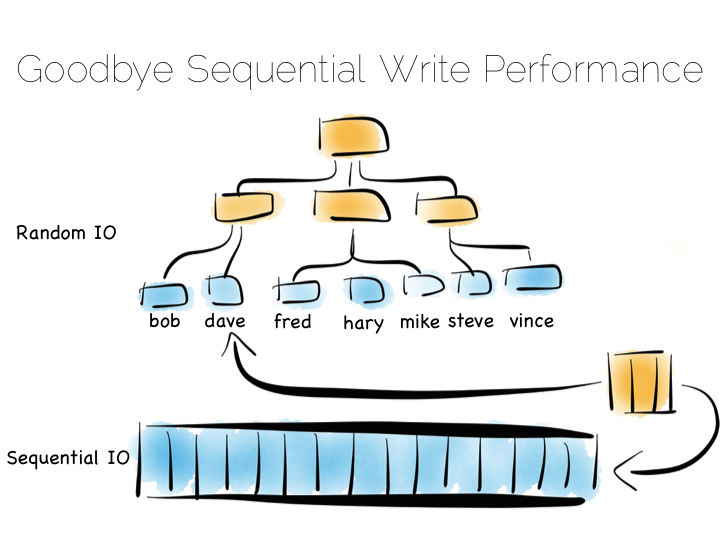

The thing with indexes like this is that they impose an overarching structure. The values are deliberately ordered so we can access them quickly when we want to do a read. The problem with the overarching structure is that it necessitates random writes as data flows in. So our wonderful, write optimised, append only file must be augmented by writes that scatter-gun the filesystem. This is going to slow us down.

Anyone who has put lots of indexes on a database table will be familiar with this problem. If we are using a regular rotating hard drive, we might run 1,000s of times slower if we maintain disk integrity of an index in this way.

Luckily there are a few ways around this problem. Here we are going to discuss three. These represent three extremes, and they are in truth simplifications of the real world, but the concepts are useful when we consider larger compositions.

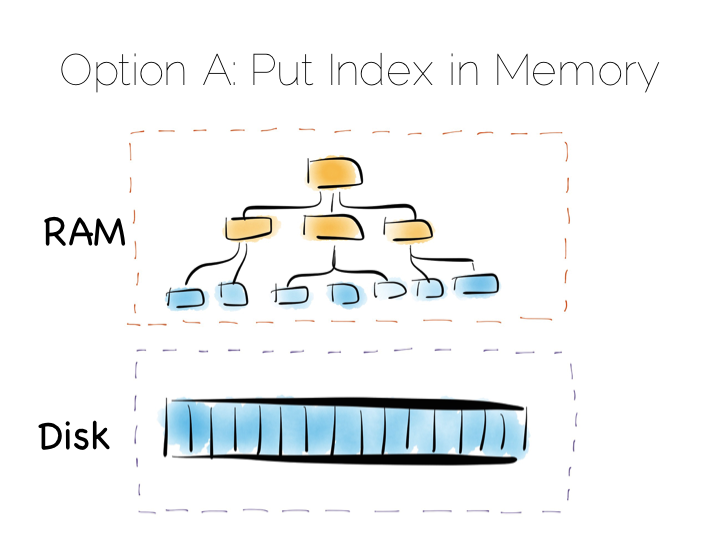

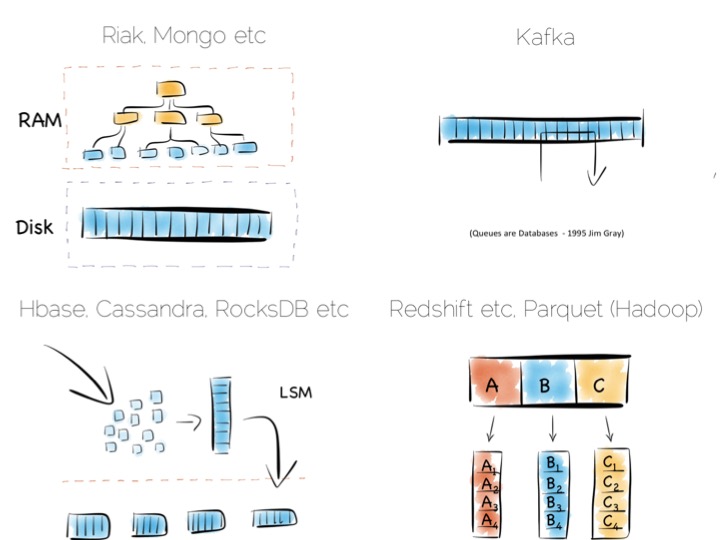

Our first option is simply to place the index in main memory. This will compartmentalise the problem of random writes to RAM. The heap file stays on disk.

This is a simple and effective solution to our random writes problem. It is also one used by many real databases. MongoDB, Cassandra, Riak and many others use this type of optimisation. Often memory mapped files are used.

However, this strategy breaks down if we have far more data than we have main memory. This is particularly noticeable where there are lots of small objects. Our index would get very large. Thus our storage becomes bounded by the amount of main memory we have available. For many tasks this is fine, but if we have very large quantities of data this can be a burden.

A popular solution is to move away from having a single ‘overarching’ index. Instead we use a collection of smaller ones.

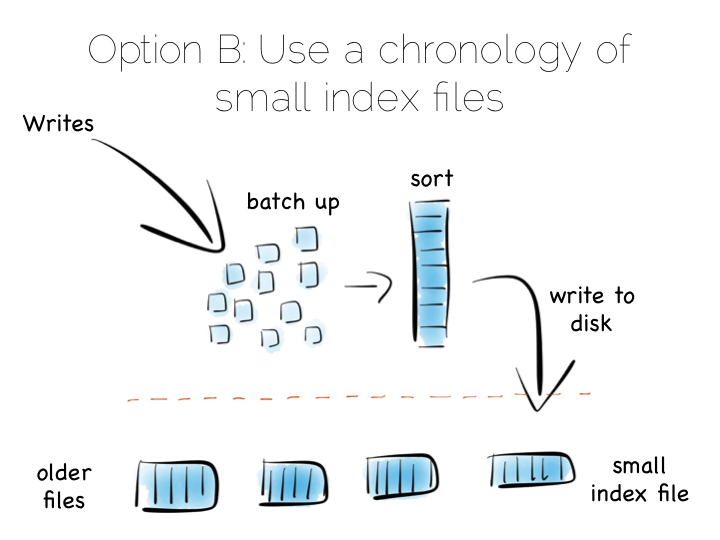

This is a simple idea. We batch up writes in main memory, as they come in. Once we have sufficient – say a few MBs – we sort them and write them to disk as an individual mini-index. What we end up with is a chronology of small, immutable index files.

So what was the point of doing that? Our set of immutable files can be streamed sequentially. This brings us back to a world of fast writes, without us needing to keep the whole index in memory. Nice!

Of course there is a downside to this approach too. When we read, we have to consult the many small indexes individually. So all we have really done is shift the problem of random IO from writes onto reads. However this turns out to be a pretty good tradeoff in many cases. It’s easier to optimise random reads than it is to optimise random writes.
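
The batching idea can be sketched in a few lines. This is a toy, not how any particular database does it: `TinyLSM` is a made-up name, the ‘files’ are just sorted lists held in memory, and the batch size is tiny so the flushes are easy to see.

```python
import bisect


class TinyLSM:
    def __init__(self, batch_size=2):
        self.batch_size = batch_size
        self.buffer = {}  # recent writes, batched in memory
        self.runs = []    # immutable sorted mini-indexes, oldest first

    def put(self, key, value):
        self.buffer[key] = value
        if len(self.buffer) >= self.batch_size:
            # Sort the batch and write it out as one sequential mini-index.
            self.runs.append(sorted(self.buffer.items()))
            self.buffer = {}

    def get(self, key):
        if key in self.buffer:
            return self.buffer[key]
        # Consult each mini-index in turn, newest first, so recent values win.
        for run in reversed(self.runs):
            i = bisect.bisect_left(run, (key,))
            if i < len(run) and run[i][0] == key:
                return run[i][1]
        return None


db = TinyLSM(batch_size=2)
db.put("bob", "London")
db.put("alice", "Paris")   # second write triggers a flush
db.put("bob", "Berlin")
db.put("carol", "Rome")    # second flush
db.put("dave", "Oslo")     # still in the buffer
```

Note how `get` may have to look in every run. That is the read-side cost described above.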

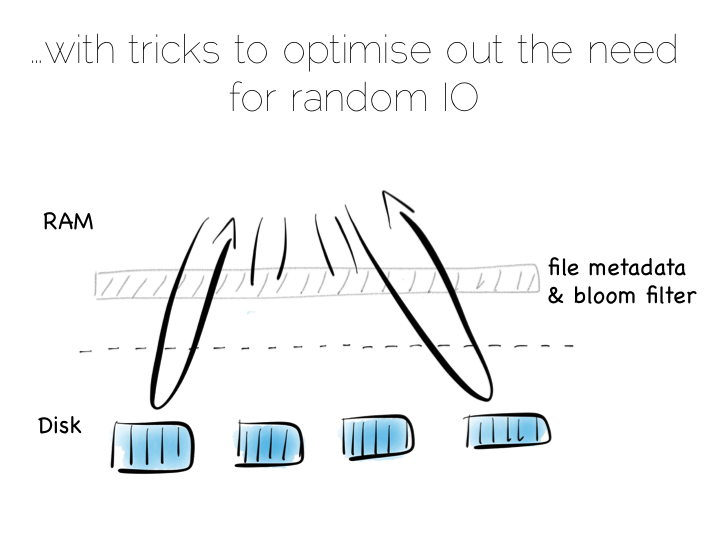

Keeping a small meta-index in memory or using a Bloom Filter provides a low-memory way of evaluating whether individual index files need to be consulted during a read operation. This gives us almost the same read performance as we’d get with a single overarching index whilst retaining fast, sequential writes.
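
A Bloom filter is small enough to sketch here. This version is illustrative only: the class name, the bit-array size and the use of SHA-256 with a seed prefix as the hash family are all my own assumptions rather than what any given database uses. The property that matters is that it can say “definitely not in this file” but never wrongly rules a file out.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, key):
        # Derive several bit positions per key by seeding the hash.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits |= 1 << pos

    def might_contain(self, key):
        # False means definitely absent; True means "possibly present".
        return all((self.bits >> pos) & 1 for pos in self._positions(key))


# One filter per index file (file names and keys are hypothetical).
index_files = {"index-001": ["alice", "bob"], "index-002": ["carol", "dave"]}
filters = {}
for name, keys in index_files.items():
    filters[name] = BloomFilter()
    for key in keys:
        filters[name].add(key)

candidates = [name for name, f in filters.items() if f.might_contain("carol")]
```

On a read we only open the files listed in `candidates`. A false positive costs one wasted lookup, never a wrong answer.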

In reality we will need to purge orphaned updates occasionally too, but that can be done with nice sequential reads and writes.
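
That purge step can be sketched as a streaming merge over the sorted files. The function name `compact` and the sample data are made up; the merge itself uses the standard library’s `heapq.merge`, which reads each input in order, so both the reads and the single output write stay sequential.

```python
import heapq


def compact(runs):
    """Merge sorted runs (oldest first) into one, keeping the newest value per key."""
    # Tag each entry with the age of its run so equal keys sort oldest to newest.
    tagged = [
        [(key, age, value) for key, value in run]
        for age, run in enumerate(runs)
    ]
    merged = []
    for key, _, value in heapq.merge(*tagged):
        if merged and merged[-1][0] == key:
            merged[-1] = (key, value)  # a newer run supersedes the older value
        else:
            merged.append((key, value))
    return merged


old_run = [("alice", "Paris"), ("bob", "London")]
new_run = [("bob", "Berlin"), ("carol", "Rome")]
compacted = compact([old_run, new_run])
```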

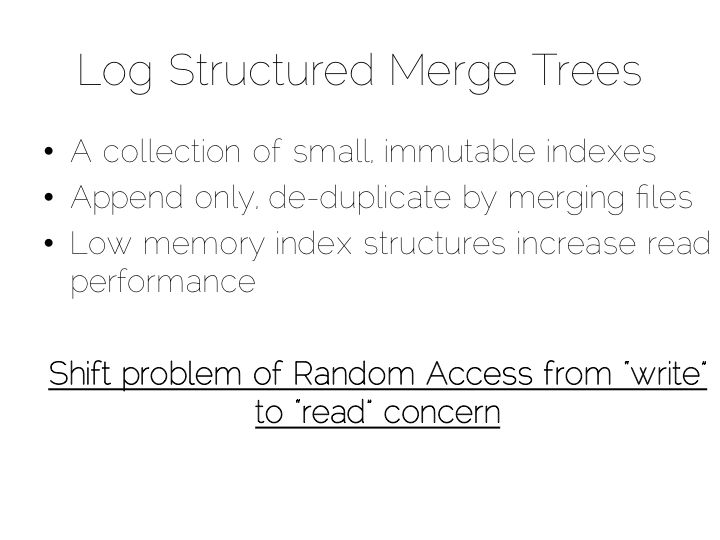

What we have created is termed a Log Structured Merge Tree, a storage approach used in a lot of big data tools such as HBase, Cassandra, Google’s BigTable and many others. It balances write and read performance with comparatively small memory overhead.

So we can get around the ‘random-write penalty’ by storing our indexes in memory or, alternatively, using a write-optimised index structure like LSM. There is a third approach though. Pure brute force.

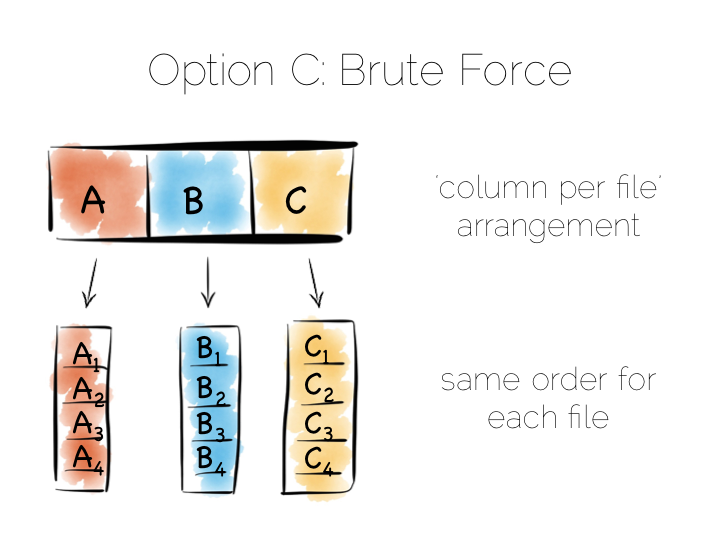

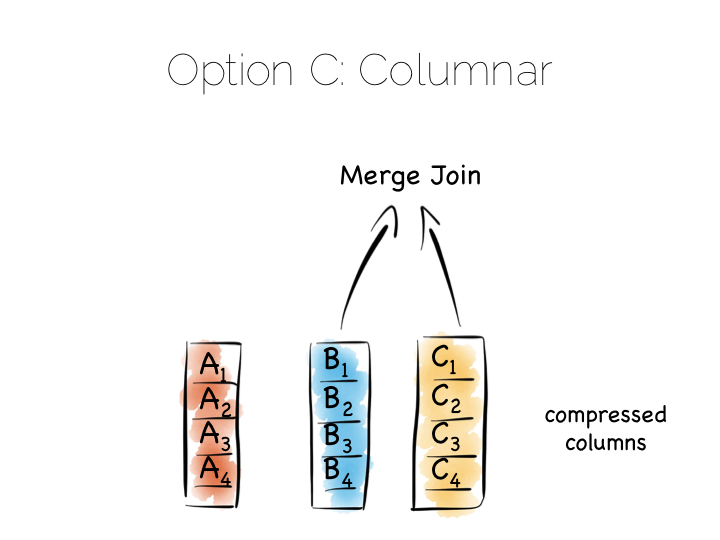

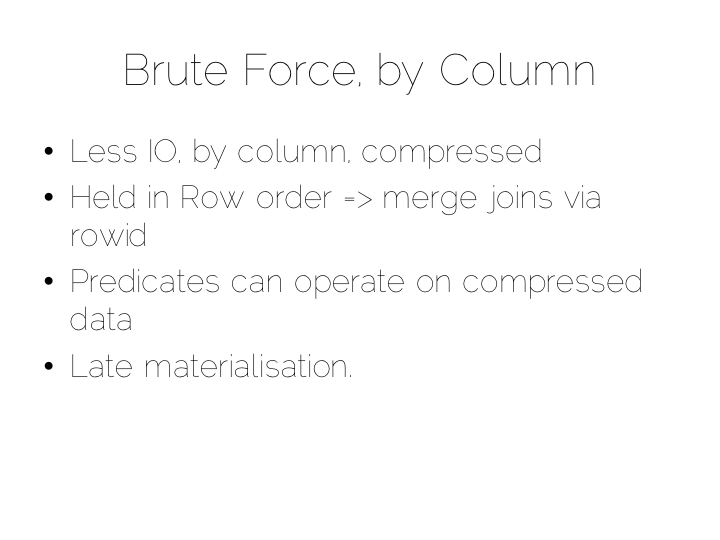

Think back to our original example of the file. We could read it in its entirety. This gave us many options in terms of how we go about processing the data within it. The brute force approach is simply to hold data by column rather than by row. This approach is termed Columnar or Column Oriented.

(It should be noted that there is an unfortunate nomenclature clash between true column stores and those that follow the BigTable pattern. Whilst they share some similarities, in practice they are quite different. It is wise to consider them as different things.)

Column Orientation is another simple idea. Instead of storing data as a set of rows, appended to a single file, we split each row by column. We then store each column in a separate file. When we read we only read the columns we need.

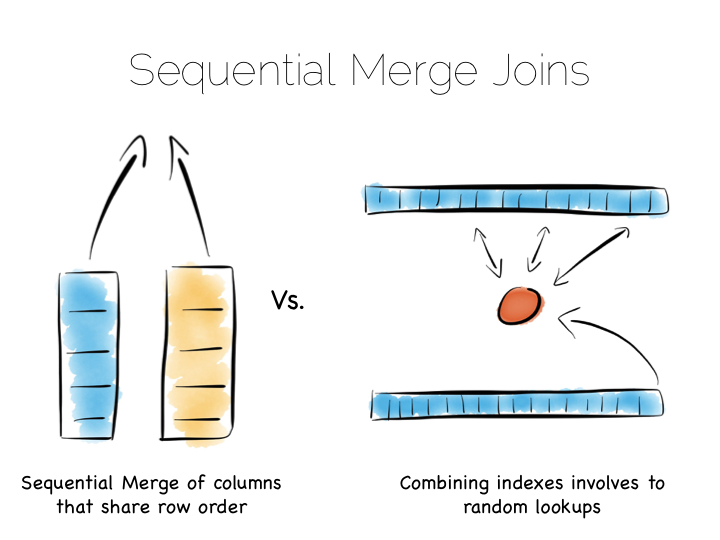

We keep the order of the files the same, so row N has the same position (offset) in each column file. This is important because we will need to read multiple columns to service a single query, all at the same time. This means ‘joining’ columns on the fly. If the columns are in the same order we can do this in a tight loop which is very cache- and CPU-efficient. Many implementations make heavy use of vectorisation to further optimise throughput for simple join and filter operations.
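
A tiny sketch of that positional join, with each column file stood in for by a Python list (the column names and values are invented). The query touches only the two columns it needs and pairs them up by position.

```python
# Each column is held separately; position N in every list belongs to row N.
names = ["bob", "alice", "carol", "dave"]
cities = ["London", "Paris", "London", "Rome"]
balances = [120, 300, 80, 45]


def total_balance_for(city):
    """Scan only the cities and balances columns, joining them by position."""
    return sum(b for c, b in zip(cities, balances) if c == city)


london_total = total_balance_for("London")
```

The `names` column is never read. In a real engine that is a file we don’t have to pull from disk at all.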

Writes can leverage the benefits of being append-only. The downside is that we now have many files to update, one for every column in every individual write to the database. The most common solution to this is to batch writes in a similar way to the one used in the LSM approach above. Many columnar databases also impose an overall order to the table as a whole to increase their read performance for one chosen key.

By splitting data by column we significantly reduce the amount of data that needs to be brought from disk, so long as our query operates on a subset of all columns.

In addition to this, data in a single column generally compresses well. We can take advantage of the data type of the column to do this, if we have knowledge of it. This means we can often use efficient, low cost encodings such as run-length, delta, bit-packed etc. For some encodings predicates can be used directly on the compressed stream too.
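
Run-length encoding is the easiest of these to show. The sketch below (function names are my own) encodes a column as (value, run length) pairs and then answers an equality predicate directly on the encoded form, without expanding it again.

```python
from itertools import groupby


def rle_encode(column):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(column)]


def count_equal(encoded, target):
    """Evaluate the predicate on the runs, without decompressing."""
    return sum(length for value, length in encoded if value == target)


cities = ["London"] * 3 + ["Paris"] * 2 + ["London"]
encoded = rle_encode(cities)
london_rows = count_equal(encoded, "London")
```

This works best when the column is sorted or naturally clustered, which is one reason columnar stores like to impose an overall order on the table.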

The result is a brute force approach that will work particularly well for operations that require large scans. Aggregate functions like average, max, min, group by etc are typical of this.

This is very different to using the ‘heap file & index’ approach we covered earlier. A good way to understand this is to ask yourself: what is the difference between a columnar approach like this vs a ‘heap & index’ where indexes are added to every field?

The answer to this lies in the ordering of the index files. BTrees etc will be ordered by the fields they index. Joining the data in two indexes involves a streaming operation on one side, but on the other side the index lookups have to read random positions in the second index. This is generally less efficient than joining two indexes (columns) that retain the same ordering. Again we’re leveraging sequential access.

So many of the best technologies which we may want to use as components in a data platform will leverage one of these core efficiencies to excel for a certain set of workloads.

Storing indexes in memory, over a heap file, is favoured by many NoSQL stores such as Riak, Couchbase or MongoDB as well as some relational databases. It’s a simple model that works well.

Tools designed to work with larger data sets tend to take the LSM approach. This gives them fast ingestion as well as good read performance using disk based structures. HBase, Cassandra, RocksDB, LevelDB and even Mongo now support this approach.

Column-per-file engines are used heavily in MPP databases like Redshift or Vertica as well as in the Hadoop stack using Parquet. These are engines for data crunching problems that require large traversals. Aggregation is the home ground for these tools.

Other products like Kafka apply the use of a simple, hardware efficient contract to messaging. Messaging, at its simplest, is just appending to a file, or reading from a predefined offset. You read messages from an offset. You go away. You come back. You read from the offset you previously finished at. All nice sequential IO.
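
The contract is simple enough to sketch in a few lines. This is a toy model of the idea, not Kafka’s API: `Log`, `append` and `read` are names I’ve chosen, and the log is a list rather than a file.

```python
class Log:
    """Append-only log; consumers remember their own offset."""

    def __init__(self):
        self.messages = []

    def append(self, message):
        self.messages.append(message)
        return len(self.messages) - 1  # offset of the new message

    def read(self, offset, max_messages=100):
        """Return messages from `offset` onwards plus the offset to resume from."""
        batch = self.messages[offset:offset + max_messages]
        return batch, offset + len(batch)


log = Log()
for message in ["a", "b", "c"]:
    log.append(message)

batch, next_offset = log.read(0)          # read, then go away
log.append("d")                           # more data arrives meanwhile
later, resume_at = log.read(next_offset)  # come back and carry on
```

The log holds no per-consumer state. Each reader just keeps a number, which is why there is no index to maintain on the write path.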

This is different to most message oriented middleware. Specifications like JMS and AMQP require the addition of indexes like the ones discussed above, to manage selectors and session information. This means they often end up performing more like a database than a file. Jim Gray made this point famously back in his 1995 publication Queues are Databases.

So all these approaches favour one tradeoff or other, often keeping things simple, and hardware sympathetic, as a means of scaling.

So we’ve covered some of the core approaches to storage engines. In truth we made some simplifications. The real world is a little more complex. But the concepts are useful nonetheless.

Scaling a data platform is more than just storage engines though. We need to consider parallelism.

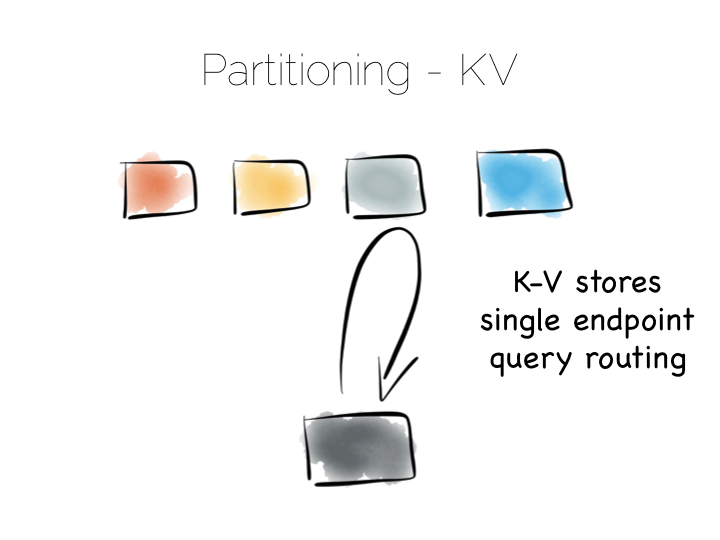

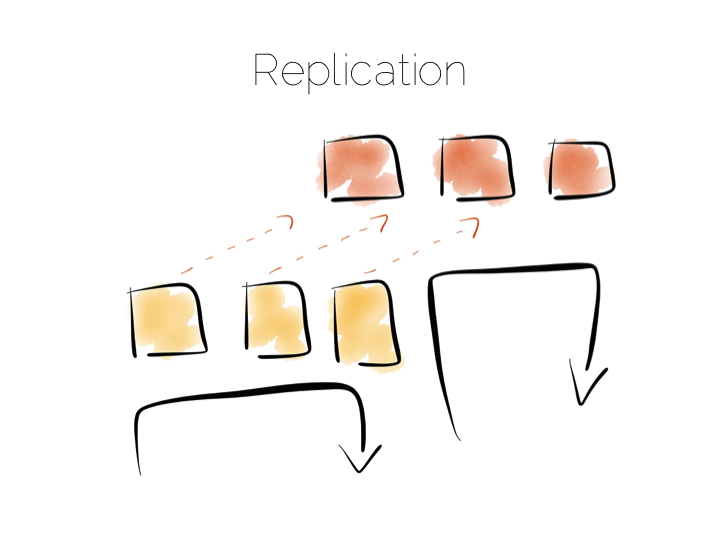

When distributing data over many machines we have two core primitives to play with: partitioning and replication. Partitioning, sometimes called sharding, works well both for random access and brute force workloads.

If a hash-based partitioning model is used the data will be spread across a number of machines using a well-known hash function. This is similar to the way a hash table works, with each bucket being held on a different machine.

The result is that any value can be read by going directly to the machine that contains the data, via the hash function. This pattern is wonderfully scalable and is the only pattern that shows linear scalability as the number of client requests increases. Requests are isolated to a single machine. Each one will be served by just a single machine in the cluster.
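
As a minimal sketch of directed access, here each ‘machine’ is just a dict and the well-known hash function is CRC32 from the standard library. The `Cluster` class and its method names are hypothetical.

```python
import zlib


class Cluster:
    def __init__(self, num_machines):
        self.machines = [dict() for _ in range(num_machines)]

    def machine_for(self, key):
        """The well-known hash function: any client can compute this."""
        return zlib.crc32(key.encode()) % len(self.machines)

    def put(self, key, value):
        self.machines[self.machine_for(key)][key] = value

    def get(self, key):
        # Go straight to the one machine that can hold the key.
        return self.machines[self.machine_for(key)].get(key)


cluster = Cluster(num_machines=4)
cluster.put("bob", "London")
value = cluster.get("bob")
holders = sum("bob" in machine for machine in cluster.machines)
```

A plain modulo like this reshuffles most keys when the machine count changes, so it should be read as an illustration of routing rather than a rebalancing strategy.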

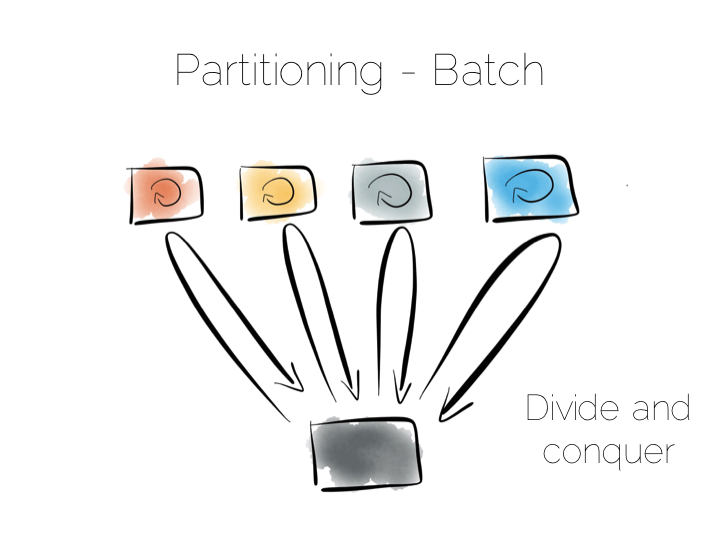

We can also use partitioning to provide parallelism over batch computations, for example aggregate functions or more complex algorithms such as those we might use for clustering or machine learning. The key difference is that we exercise all machines at the same time, in a broadcast manner. This allows us to solve a large computational problem in a much shorter time, using a divide and conquer approach.

Batch systems work well for large problems, but provide little concurrency as they tend to exhaust the resources on the cluster when they execute.

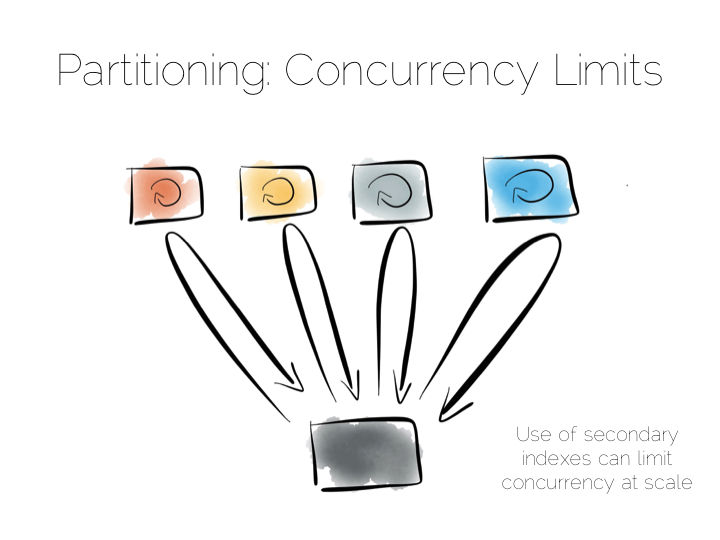

So the two extremes are pretty simple: Directed access at one end. Broadcast, divide and conquer at the other. Where we need to be careful is in the middle ground that lies between the two. A good example of this is the use of secondary indexes in NoSQL stores that span many machines.

A secondary index is an index that isn’t on the primary key. This means the data will not be partitioned by the values in the index. Directed routing via a hash function is no longer an option. We have to broadcast requests to all machines. This limits concurrency. Every node must be involved in every query.
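
To see why, consider this sketch, where data is partitioned by a customer id and then queried by city. The machine count, record shape and function names are all invented. The lookup by id touches one machine; the lookup by city has no choice but to visit all of them.

```python
import zlib

NUM_MACHINES = 3
machines = [dict() for _ in range(NUM_MACHINES)]


def machine_for(customer_id):
    return zlib.crc32(customer_id.encode()) % NUM_MACHINES


def put(customer_id, record):
    machines[machine_for(customer_id)][customer_id] = record


def get_by_id(customer_id):
    """Directed access: exactly one machine is touched."""
    return machines[machine_for(customer_id)].get(customer_id)


def find_by_city(city):
    """Secondary lookup: city says nothing about placement, so ask every machine."""
    touched = 0
    results = []
    for machine in machines:
        touched += 1
        results.extend(cid for cid, rec in machine.items() if rec["city"] == city)
    return sorted(results), touched


put("c1", {"city": "London"})
put("c2", {"city": "Paris"})
put("c3", {"city": "London"})
londoners, machines_touched = find_by_city("London")
```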

For this reason many key value stores have resisted the temptation to add secondary indexes, despite their obvious use. HBase and Voldemort are examples of this. But many others do expose them, MongoDB, Cassandra, Riak etc. This is good as secondary indexes are useful. But it’s important to understand the effect they will have on the overall concurrency of the system.

The route out of this concurrency bottleneck is replication. You’ll probably be familiar with replication either from using async slave databases or from replicated NoSQL stores like Mongo or Cassandra.

In practice replicas can be invisible (used only for recovery), read only (adding concurrency) or read-write (adding availability under network partitions). Which of these you choose will trade off against the consistency of the system. This is simply the application of CAP theorem (although CAP theorem also may not be as simple as you think).

This tradeoff with consistency* brings us to an important question. When does consistency matter?

Consistency is expensive. In the database world ACID is guaranteed by serialisability. This is essentially ensuring that all operations appear to occur in sequential order. It turns out to be a pretty expensive thing. In fact it’s prohibitive enough that many databases don’t offer it as an isolation level at all. Those that do rarely set it as the default.

Suffice to say that if you apply strong consistency to a system that does distributed writes you’ll likely end up in tortoise territory.

(* note the term consistency has two common usages. The C in ACID and the C in CAP. They are unfortunately not the same. I’m using the CAP definition: all nodes see the same data at the same time)

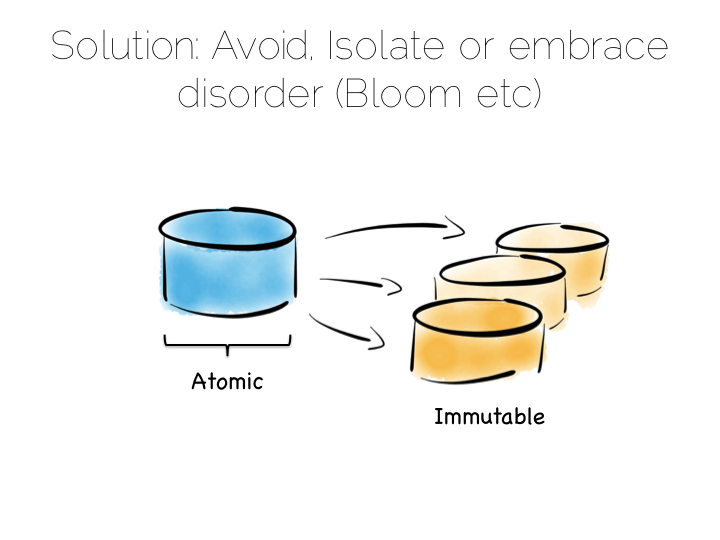

The solution to this consistency problem is simple. Avoid it. If you can’t avoid it, isolate it to as few writers and as few machines as possible.

Avoiding consistency issues is often quite easy, particularly if your data is an immutable stream of facts. A set of web logs is a good example. They have no consistency concerns as they are just facts that never change.

There are other use cases which do necessitate consistency though. Transferring money between accounts is an oft used example. Non-commutative actions, such as applying discount codes, are another.
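To see why ordering matters for discount codes, here is a tiny sketch. The two codes and the prices (in pence, to avoid floating point) are made up for illustration.

```python
def ten_percent_off(price_pence):
    return price_pence * 90 // 100

def five_pounds_off(price_pence):
    return price_pence - 500

basket = 10_000  # a 100.00 basket, in pence

percent_then_fixed = five_pounds_off(ten_percent_off(basket))
fixed_then_percent = ten_percent_off(five_pounds_off(basket))
```

The first ordering gives 8500 and the second gives 8550. If two replicas apply the same pair of codes in different orders they end up disagreeing, which is exactly why such actions need someone to agree on the order.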

But often things that appear to need consistency, in a traditional sense, may not. For example if an action can be changed from a mutation to a new set of associated facts we can avoid mutable state. Consider marking a transaction as being potentially fraudulent. We could update it directly with the new field. Alternatively we could simply use a separate stream of facts that links back to the original transaction.
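Here is a minimal sketch of the fraud example, assuming hypothetical `Transaction` and `FraudFlag` record types. The original transaction is never updated. The suspicion is recorded as a new fact that points back at it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Transaction:
    txn_id: str
    amount: int

@dataclass(frozen=True)
class FraudFlag:
    txn_id: str   # links back to the original transaction
    reason: str

# Two append-only streams of immutable facts.
transactions = [Transaction("t1", 120), Transaction("t2", 9_500)]
fraud_flags = []

# Rather than mutating t2 in place, we append a new fact about it.
fraud_flags.append(FraudFlag("t2", "unusual amount"))

def suspicious(transactions, fraud_flags):
    """Derive the 'potentially fraudulent' view by joining the two streams."""
    flagged = {flag.txn_id for flag in fraud_flags}
    return [t for t in transactions if t.txn_id in flagged]

flagged_txns = suspicious(transactions, fraud_flags)
```

Because both record types are frozen, nothing can quietly mutate a transaction after the fact. The cost is that readers who care about fraud must now do the join.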

So in a data platform it’s useful to either remove the consistency requirement altogether, or at least isolate it. One way to isolate it is to use the single writer principle, which gets you some of the way. Datomic is a good example of this. Another is to physically isolate the consistency requirement by splitting mutable and immutable worlds.
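The single writer principle can be sketched inside one process. This is only an illustration of the idea, not how Datomic or any other product implements it. Many client threads submit commands to a queue, but only one thread ever touches the mutable state, so no locks are needed around it.

```python
import queue
import threading

commands = queue.Queue()
balances = {}  # mutable state, only ever touched by the writer thread

def writer():
    """The single writer: applies commands one at a time, in queue order."""
    while True:
        command = commands.get()
        if command is None:  # sentinel used here to stop the writer
            break
        account, delta = command
        balances[account] = balances.get(account, 0) + delta

def client(account, n):
    """Clients never mutate state directly. They only enqueue requests."""
    for _ in range(n):
        commands.put((account, 1))

writer_thread = threading.Thread(target=writer)
writer_thread.start()

clients = [threading.Thread(target=client, args=("acc", 1000)) for _ in range(4)]
for c in clients:
    c.start()
for c in clients:
    c.join()

commands.put(None)
writer_thread.join()
```

Four concurrent clients each submit 1000 increments and the final balance is 4000, because all the contention has been funnelled into a single ordered queue.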

Approaches like Bloom/CALM extend this idea further by embracing the concept of disorder by default, imposing order only when necessary.

So those were some of the fundamental tradeoffs we need to consider. Now how do we pull these things together to build a data platform?

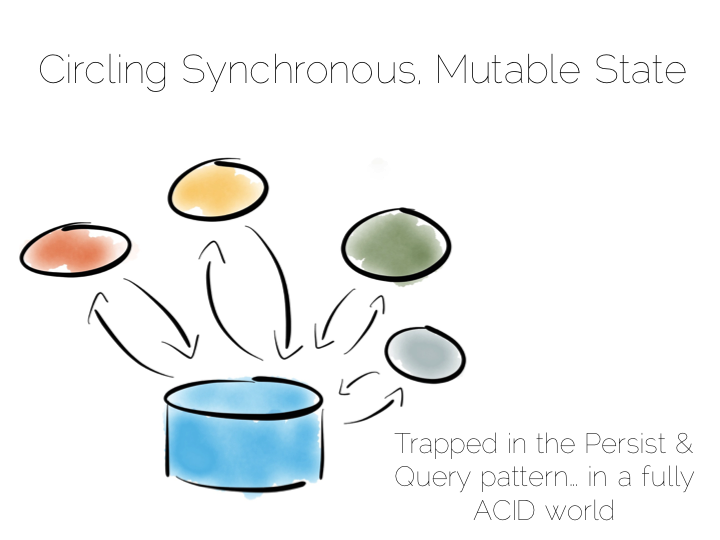

A typical application architecture might look something like the below. We have a set of processes which write data to a database and read it back again. This is fine for many simple workloads. Many successful applications have been built with this pattern. But we know it works less well as throughput grows. In the application space this is a problem we might tackle with message-passing, actors, load balancing etc.

The other problem is this approach treats the database as a black box. Databases are clever software. They provide a huge wealth of features. But they provide few mechanisms for scaling out of an ACID world. This is a good thing in many ways. We default to safety. But it can become an annoyance when scaling is inhibited by general guarantees which may be overkill for the requirements we have.

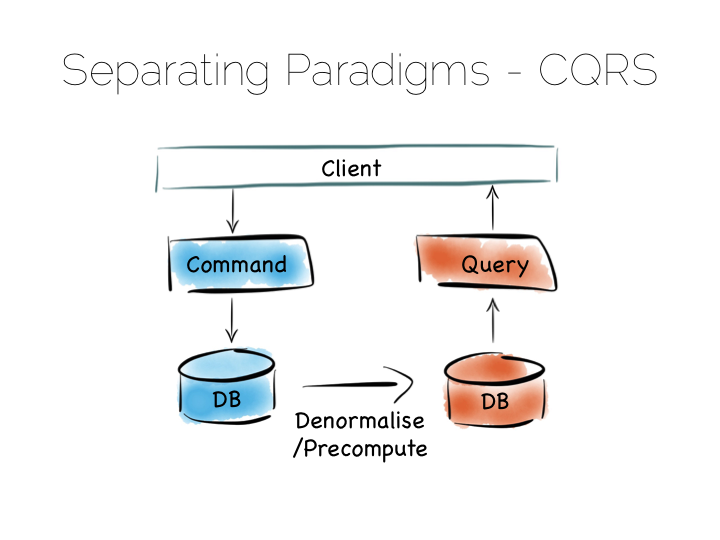

The simplest route out of this is CQRS (Command Query Responsibility Segregation).

Another very simple idea. We separate read and write workloads. Writes go into something write-optimised. Something closer to a simple journal file. Reads come from something read-optimised. There are many ways to do this, be it tools like Goldengate for relational technologies or products that integrate replication internally such as Replica Sets in MongoDB.
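As a rough sketch of the separation, assume a hypothetical `Journal` on the write side and a `BalanceView` on the read side. Writes are nothing more than appends. The read side catches up asynchronously and serves queries from its own structure.

```python
class Journal:
    """Write side: an append-only list standing in for a journal file."""

    def __init__(self):
        self.entries = []

    def append(self, account, delta):
        self.entries.append((account, delta))

class BalanceView:
    """Read side: a structure shaped for the queries we want to answer."""

    def __init__(self):
        self.balances = {}
        self.position = 0  # how far through the journal this view has read

    def catch_up(self, journal):
        for account, delta in journal.entries[self.position:]:
            self.balances[account] = self.balances.get(account, 0) + delta
        self.position = len(journal.entries)

    def balance(self, account):
        return self.balances.get(account, 0)

journal = Journal()
view = BalanceView()

journal.append("alice", 100)
journal.append("alice", -30)
stale = view.balance("alice")   # the view has not caught up yet

view.catch_up(journal)
fresh = view.balance("alice")
```

The `stale` read is the price of the pattern: the read side lags the write side until it catches up, so this suits workloads that can tolerate that delay.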

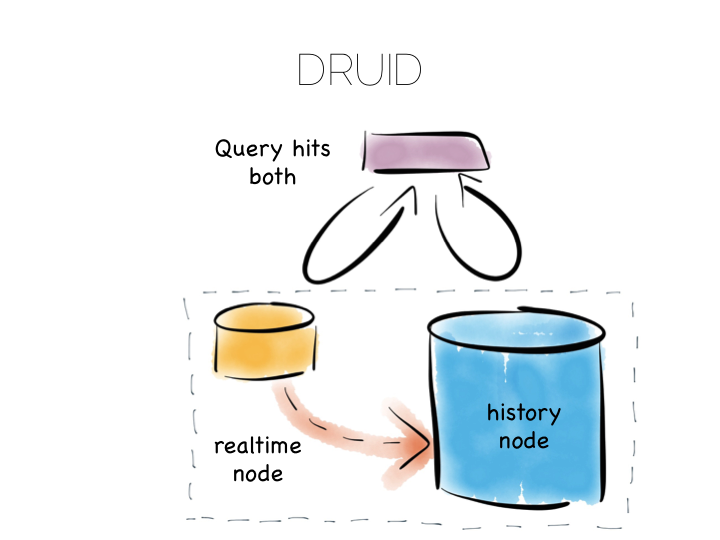

Many databases do something like this under the hood. Druid is a nice example. Druid is an open source, distributed, time-series, columnar analytics engine. Columnar storage works best if we input data in large blocks, as the data must be spread across many files. To get good write performance Druid stores recent data in a write optimised store. This is gradually ported over to the read optimised store over time.

When Druid is queried the query routes to both the write optimised and read optimised components. The results are combined (‘reduced’) and returned to the user. Druid uses time, marked on each record, to determine ordering.
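The shape of that query path can be sketched as below. To be clear, this is a toy illustration of the composite idea and not Druid’s API. The class and function names are invented. One store takes cheap appends, the other keeps sorted columns, and a query fans out to both then orders the combined result by the timestamp on each record.

```python
from bisect import bisect_left

class WriteOptimisedStore:
    """Recent data: appends are cheap, scans walk every row."""

    def __init__(self):
        self.rows = []

    def append(self, ts, value):
        self.rows.append((ts, value))

    def scan(self, start, end):
        return [(ts, v) for ts, v in self.rows if start <= ts < end]

class ReadOptimisedStore:
    """Older data: sorted columns built in bulk, so range scans are cheap."""

    def __init__(self, rows):
        rows = sorted(rows)
        self.timestamps = [ts for ts, _ in rows]
        self.values = [v for _, v in rows]

    def scan(self, start, end):
        lo = bisect_left(self.timestamps, start)
        hi = bisect_left(self.timestamps, end)
        return list(zip(self.timestamps[lo:hi], self.values[lo:hi]))

def query(stores, start, end):
    """Route to every store, then combine ('reduce') ordered by record time."""
    hits = []
    for store in stores:
        hits.extend(store.scan(start, end))
    return sorted(hits, key=lambda row: row[0])

historical = ReadOptimisedStore([(1, "a"), (2, "b"), (3, "c")])
recent = WriteOptimisedStore()
recent.append(5, "e")
recent.append(4, "d")  # arrives out of order

result = query([historical, recent], start=2, end=6)
```

The caller sees one ordered answer and never needs to know which store each row came from.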

Composite approaches like this provide the benefits of CQRS behind a single abstraction.

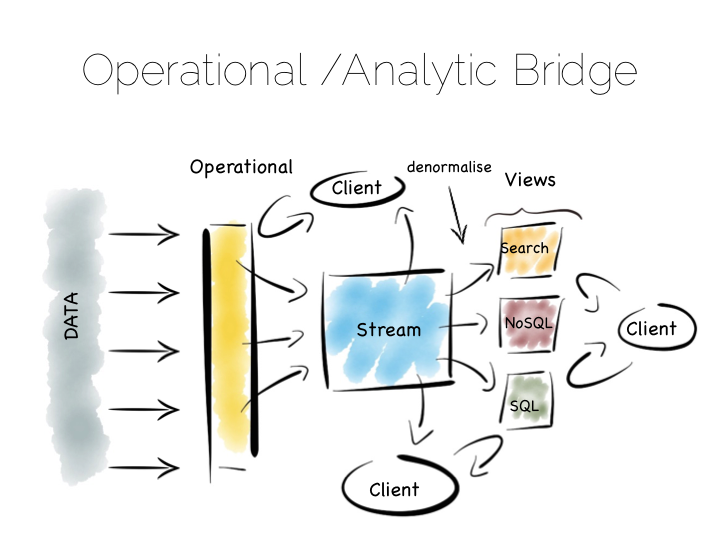

Another similar approach is to use an Operational/Analytic Bridge. Read- and write-optimised views are separated using an event stream. The stream of state is retained indefinitely, so that the async views can be recomposed and augmented at a later date by replaying.
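The value of retaining the stream shows up when you want a view you didn’t think of at the start. A minimal sketch, with made-up trade events and view functions:

```python
# The retained event stream: an ordered, immutable history.
events = [
    {"symbol": "ABC", "price": 10},
    {"symbol": "XYZ", "price": 7},
    {"symbol": "ABC", "price": 12},
]

def replay(events, apply, state):
    """Rebuild a view from scratch by folding over the whole stream."""
    for event in events:
        apply(state, event)
    return state

def apply_latest_price(state, event):
    state[event["symbol"]] = event["price"]

def apply_trade_count(state, event):
    state[event["symbol"]] = state.get(event["symbol"], 0) + 1

# The view we built on day one.
latest_price = replay(events, apply_latest_price, {})

# A view added much later. It still sees the full history.
trade_count = replay(events, apply_trade_count, {})
```

Neither view is the system of record. Either can be thrown away, reshaped or moved to a different storage engine, then regenerated from the stream.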

So the front section provides for synchronous reads and writes. This can be as simple as immediately reading data that was written or as complex as supporting ACID transactions.

The back end leverages asynchronicity, and the advantages of immutable state, to scale offline processing through replication, denormalisation or even completely different storage engines. The messaging-bridge, along with joining the two, allows applications to listen to the data flowing through the platform.

As a pattern this is well suited to mid-sized deployments where there is at least a partial, unavoidable requirement for a mutable view.

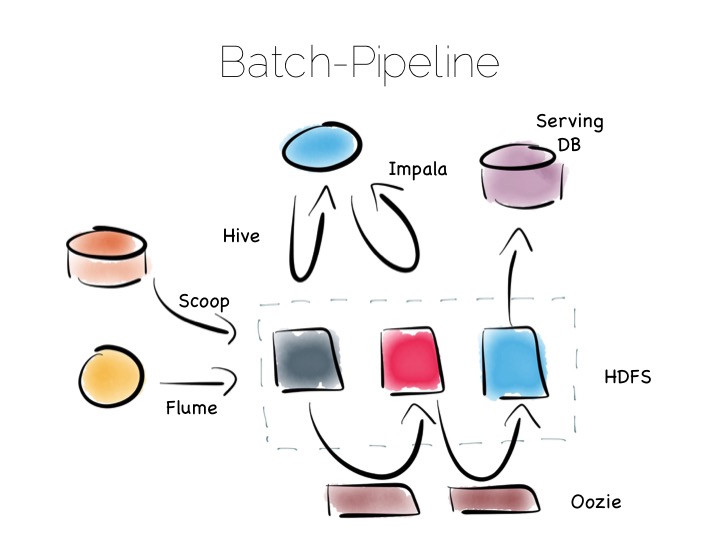

If we are designing for an immutable world, it’s easier to embrace larger data sets and more complex analytics. The batch pipeline, one almost ubiquitously implemented with the Hadoop stack, is typical of this.

The beauty of the Hadoop stack comes from its plethora of tools. Whether you want fast read-write access, cheap storage, batch processing, high throughput messaging or tools for extracting, processing and analysing data, the Hadoop ecosystem has it all.

The batch pipeline architecture pulls data from pretty much any source, push or pull, ingests it into HDFS, then processes it to provide increasingly optimised versions of the original data. Data might be enriched, cleansed, denormalised, aggregated, moved to a read optimised format such as Parquet or loaded into a serving layer or data mart. Data can be queried and processed throughout this process.

This architecture works well for immutable data, ingested and processed in large volume. Think 100s of TBs (although size alone isn’t a great metric). The evolution of this architecture will be slow though. Straight-through timings are often measured in hours.

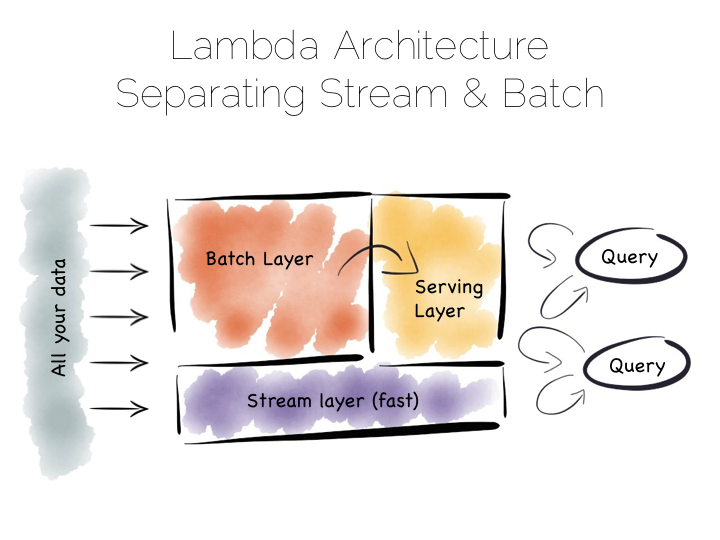

The problem with the Batch Pipeline is that we often don’t want to wait hours to get a result. A common solution is to add a streaming layer alongside it. This is sometimes referred to as the Lambda Architecture.

The Lambda Architecture retains a batch pipeline, like the one above, but it circumvents it with a fast streaming layer. It’s a bit like building a bypass around a busy town. The streaming layer typically uses a stream processing tool such as Storm or Samza.

The key insight of the Lambda Architecture is that we’re often happy to have an approximate answer quickly, but we would like an accurate answer in the end.

So the streaming layer bypasses the batch layer, providing the best answers it can within a streaming window. These are written to a serving layer. Later the batch pipeline computes an accurate answer and overwrites the approximation.
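A toy version of that flow is sketched below. The page-view events and the `late` flag are invented for illustration: the streaming layer only counts what arrived inside its window, while the batch layer later recounts everything and overwrites the serving layer.

```python
from collections import Counter

events = [
    {"page": "/home", "late": False},
    {"page": "/home", "late": False},
    {"page": "/home", "late": True},   # arrived after the streaming window closed
    {"page": "/docs", "late": False},
]

serving_layer = {}

def run_stream_layer(events):
    """Fast but approximate: counts only what arrived within the window."""
    counts = Counter(e["page"] for e in events if not e["late"])
    for page, n in counts.items():
        serving_layer[page] = {"views": n, "source": "stream"}

def run_batch_layer(events):
    """Slow but accurate: recomputes over all data and overwrites."""
    counts = Counter(e["page"] for e in events)
    for page, n in counts.items():
        serving_layer[page] = {"views": n, "source": "batch"}

run_stream_layer(events)
approximate = dict(serving_layer)  # what readers see in the meantime

run_batch_layer(events)            # hours later, in a real pipeline
```

Readers of `/home` see 2 views straight away and 3 once the batch run lands. Notice that the counting logic appears twice, once per layer, which is the dual coding problem in miniature.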

This is a clever way to balance accuracy with responsiveness. Some implementations of this pattern suffer if the two branches end up being dual coded in stream and batch layers. But it is often possible to simply abstract this logic into common libraries that can be reused, particularly as much of this processing is often written in external languages such as Python or R anyway. Alternatively systems like Spark provide both stream and batch functionality in one system (although the streams in Spark are really micro-batches).

So this pattern again suits high volume data platforms, say in the 100TB range, that want to combine streams with existing, rich, batch based analytic function.

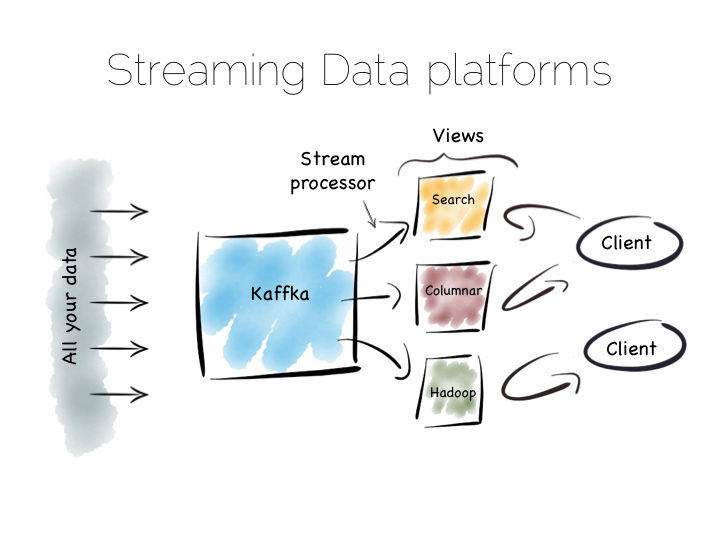

There is another approach to this problem of slow data pipelines. It’s sometimes termed the Kappa architecture. I actually thought this name was ‘tongue in cheek’ but I’m now not so sure. Whichever it is, I’m going to use the term Stream Data Platform, which is also a term in use.

Stream Data Platforms flip the batch pattern on its head. Rather than storing data in HDFS, and refining it with incremental batch jobs, the data is stored in a scale out messaging system, or log, such as Kafka. This becomes the system of record and the stream of data is processed in real time to create a set of tertiary views, indexes, serving layers or data marts.

This is broadly similar to the streaming layer of the Lambda architecture but with the batch layer removed. Obviously the requirement for this is that the messaging layer can store and vend very large volumes of data and there is a sufficiently powerful stream processor to handle the processing.

There is no free lunch so, for hard problems, Stream Data Platforms will likely run no faster than an equivalent batch system, but switching the default approach from ‘store and process’ to ‘stream and process’ can provide greater opportunity for faster results.

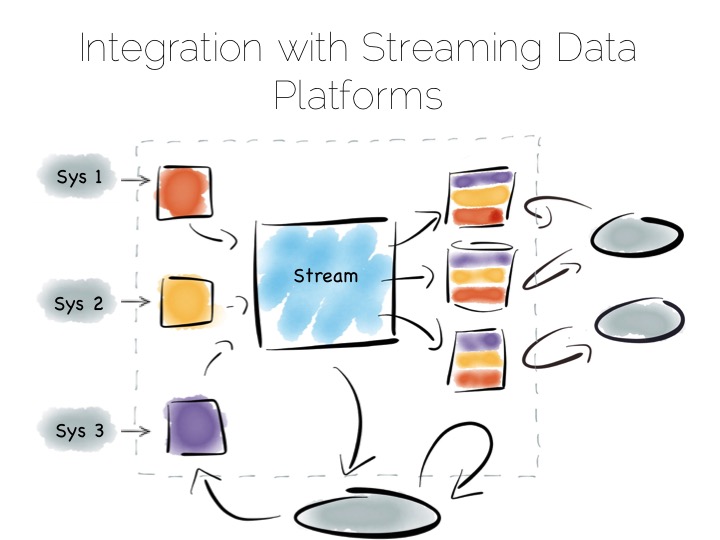

Finally, the Stream Data Platform approach can be applied to the problem of ‘application integration’. This is a thorny and difficult problem that has seen focus from big vendors such as Informatica, Tibco and Oracle for many years. For the most part results have been beneficial, but not transformative. Application integration remains a topic looking for a real workable solution.

Stream Data Platforms provide an interesting potential solution to this problem. They take many of the benefits of an O/A bridge – the variety of asynchronous storage formats and ability to recreate views – but leave the consistency requirement isolated in, often existing, sources:

With the system of record being a log it’s easy to enforce immutability. Products like Kafka can retain enough volume and throughput, internally, to be used as a historic record. This means recovery can be a process of replaying and regenerating state, rather than constantly checkpointing.

Similarly styled approaches have been taken before in a number of large institutions with tools such as Goldengate, porting data to enterprise data warehouses or more recently data lakes. They were often thwarted by a lack of throughput in the replication layer and the complexity of managing changing schemas. It seems unlikely the first problem will continue. As for the latter problem though, the jury is still out.

~

So we started with locality. With sequential addressing for both reads and writes. This dominates the tradeoffs inside the components we use. We looked at scaling these components out, leveraging primitives for both sharding and replication. Finally we rebranded consistency as a problem we should isolate in the platforms we build.

But data platforms themselves are really about balancing the sweet-spots of these individual components within a single, holistic form. Incrementally restructuring. Migrating the write-optimised to the read-optimised. Moving from the constraints of consistency to the open plains of streamed, asynchronous, immutable state.

This must be done with a few things in mind. Schemas are one. Time, the peril of the distributed, asynchronous world, is another. But these problems are manageable if carefully addressed. Certainly the future is likely to include more of these things, particularly as tooling, innovated in the big data space, percolates into platforms that address broader problems, both old and new.

~

Upside Down Databases: Bridging the Operational and Analytic Worlds with Streams

Tuesday, April 7th, 2015

Remember the days when people would write entire applications, embedded inside a database? It seems a bit crazy now when you think about it. Imagine writing an entire application in SQL. I worked on a beast like that, very briefly, in the late 1990s. It had a few shell scripts but everything else was SQL. Everything. Suffice to say it wasn’t much fun – you can probably imagine – but there was a slightly perverse simplicity to the whole thing.

So Martin Kleppmann did a talk recently around the idea of turning databases inside out. I like this idea. It’s a nice way to frame a problem that has lurked unresolved for years. To paraphrase somewhat… databases do very cool stuff: caching, indexes, replication, materialised views. These are very cool things. They do them well too. It’s a shame that they’re locked in a world dislocated from general consumer programs.

There are also a few things missing, like databases don’t really do events, streams, messaging, whatever you want to call it. Some newer ones do, but none cover what you might call ‘general purpose’ streams. This means the query-driven paradigm often leaks into the application space. Applications end up circling around centralised mutable state. Whilst there are valid use cases for this, the rigid and synchronous world produced can be counterproductive for many types of programs.

So it’s interesting to look at the pros and cons of externalising caches, indexes, materialised views and asynchronous streams of state. I got to see some of these ideas play out in a data platform built for a large financial institution. It used messaging as the system of record. It also employed synchronous and asynchronous views. These could be generated, and regenerated, from this event stream.

The approach had some nice side effects which we didn’t originally anticipate. Making the system of record an event stream was actually born somewhat from necessity. The front of the system used a data grid as a consolidation point. Data grids need external persistence. We wanted the back end to support analytics. Analytic systems work best when writes are batched, so we needed something to buffer the two. Something that would scale out disk writes linearly with the sharded data grid. Topic based messaging seemed like a good fit. Clients needed notifications anyway.

This led to some interesting properties. The front end provided a near term, consistent view. Clients could collaborate around it. It could be scaled out horizontally by adding shards. At the back, everything was asynchronous and immutable. This meant it was easy to scale with replicas. Creating another replica is relatively simple when your system of record is an event stream.

The event stream tied the two together. It was the bridge between the operational and analytic sections. A stream for clients to fork directly or as a firehose to create another view.